Welcome back!!! We are at Part 6 of the blog series on vSphere supervisor networking with NSX and AVI, where we discuss the topology and routing considerations for vSphere namespaces with a dedicated T0 gateway. If you missed the previous articles on namespace topologies with supervisor network inheritance and with custom Ingress / Egress networks, they are linked below. I recommend reading them before proceeding with this article:

Part 4: vSphere namespace with network inheritance

https://vxplanet.com/2025/05/15/vsphere-supervisor-networking-with-nsx-and-avi-part-4-vsphere-namespace-with-network-inheritance/

Part 5: vSphere namespace with custom Ingress and Egress network

https://vxplanet.com/2025/05/16/vsphere-supervisor-networking-with-nsx-and-avi-part-5-vsphere-namespace-with-custom-ingress-and-egress-network/

Let’s get started:

vSphere Namespace with Dedicated T0 Gateway

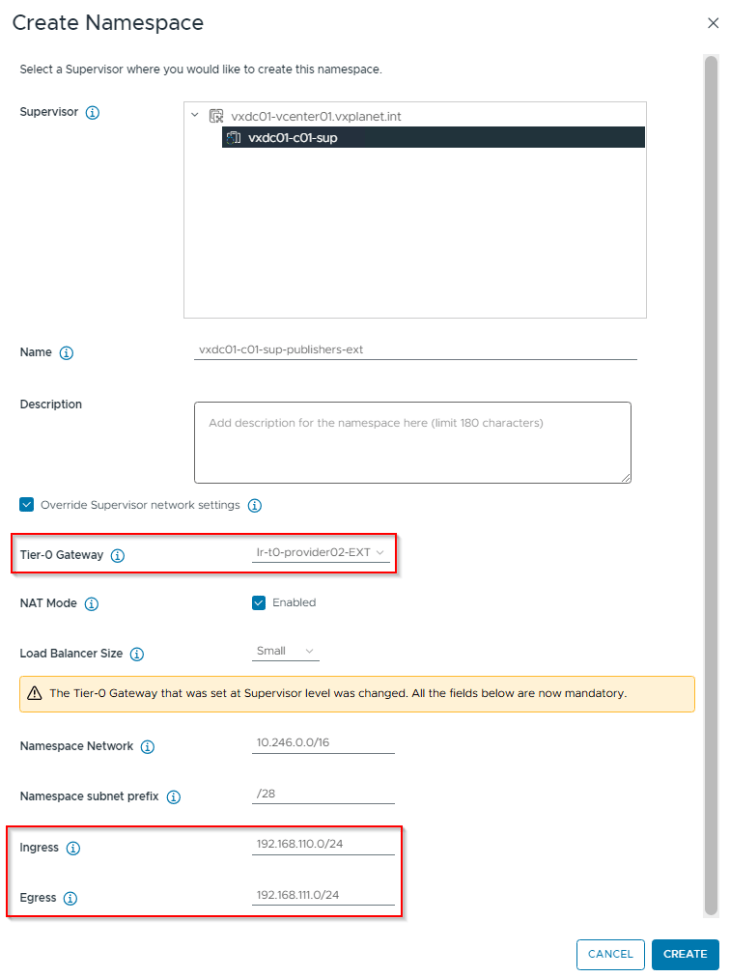

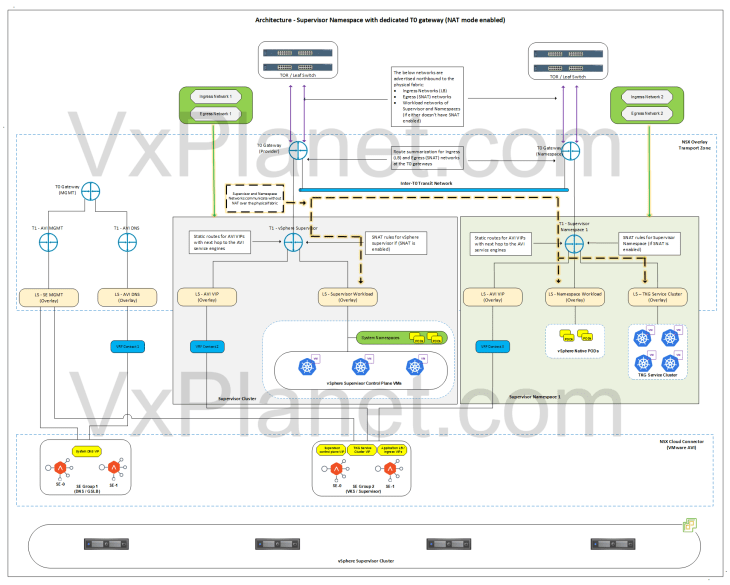

In this vSphere namespace topology, we will override all the network settings from the vSphere supervisor, because overriding the T0 gateway at the namespace level requires unique values for the namespace network, Ingress and Egress CIDRs. The overridden settings are:

- T0 Gateway

- Workload / Namespace CIDR

- Ingress CIDR

- Egress CIDR (if NAT mode is enabled)

- NAT mode
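Since the overridden CIDRs need to be unique, it can help to sanity-check them before filling in the namespace creation workflow. Below is a minimal sketch using Python's standard `ipaddress` module. The supervisor network (10.244.0.0/20) and namespace network (10.246.0.0/16) are the values used in this series; the Ingress and Egress CIDRs and all the names are hypothetical placeholders.

```python
from ipaddress import ip_network
from itertools import combinations


def find_overlaps(cidrs):
    """Return the pairs of names whose CIDR blocks overlap."""
    nets = {name: ip_network(cidr) for name, cidr in cidrs.items()}
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]


cidrs = {
    "supervisor-network": "10.244.0.0/20",    # from this series
    "namespace-network": "10.246.0.0/16",     # from this article
    "namespace-ingress": "192.168.104.0/24",  # hypothetical value
    "namespace-egress": "192.168.105.0/24",   # hypothetical value
}

overlaps = find_overlaps(cidrs)
print(overlaps if overlaps else "No overlapping CIDRs found")
```

An empty result only tells us that the listed blocks don't collide with each other; it says nothing about what is already in use elsewhere in the environment.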

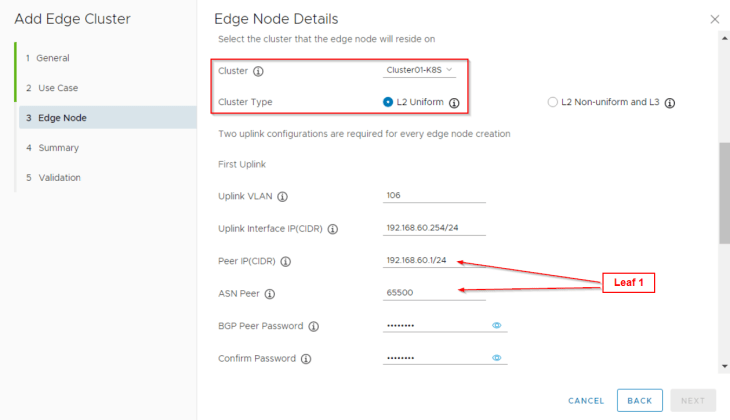

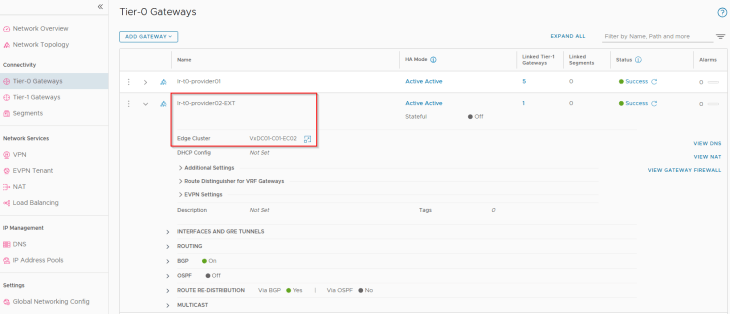

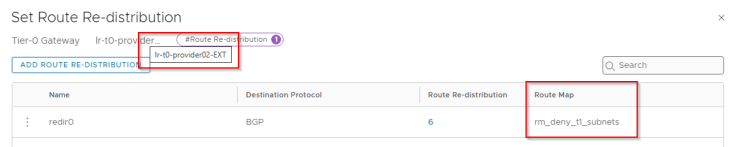

We have a new T0 gateway “lr-t0-provider02-EXT” created on a new edge cluster “VxDC01-C01-EC02” that will be used for a fictitious publishing environment. As an example use case, this T0 gateway has BGP peering with dedicated upstream appliances to publish applications to a partner organization over a different routing path.

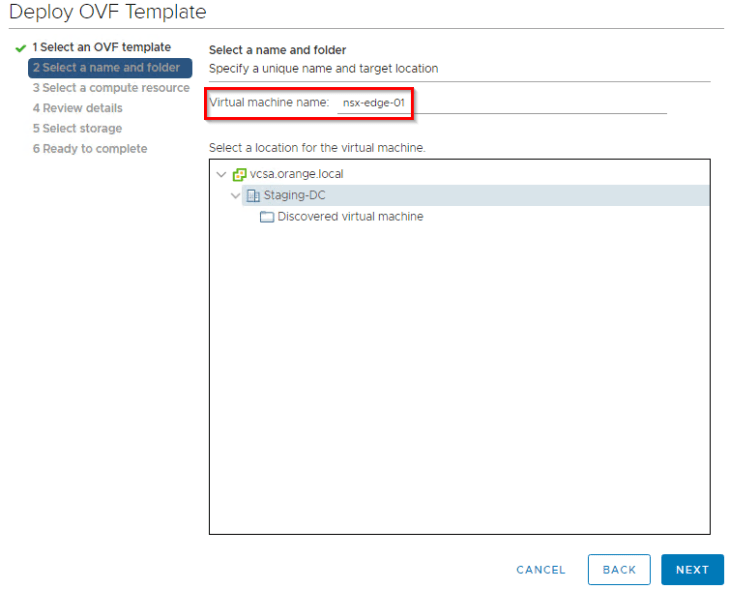

Let’s log in to vCenter Workload Management and create a vSphere namespace for the publishing environment, making sure that the “Override supervisor network settings” flag is checked, the custom T0 gateway is selected, and the fields for namespace network, Ingress and Egress CIDR are updated with custom values.

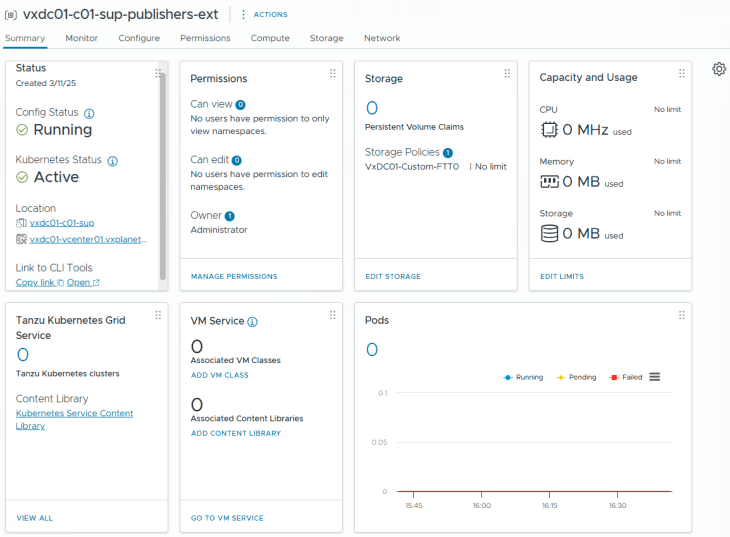

After the basic namespace configuration (permissions, storage class, VM Class assignments, etc.), we should have the vSphere namespace up and running.

Reviewing NSX objects

Now let’s review the NSX objects that are created by the workflow.

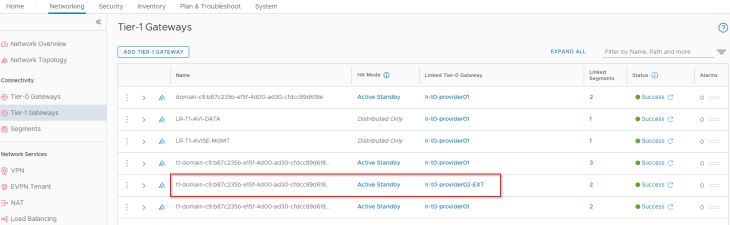

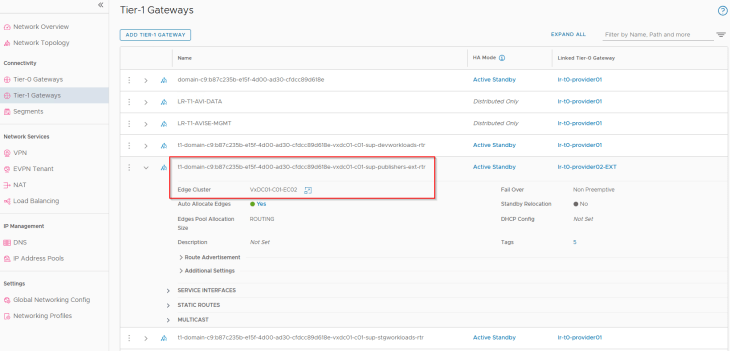

As discussed in Part 1 – Architecture and Topologies, a dedicated T1 gateway is created for the vSphere namespace. However, this T1 gateway is upstreamed to the custom T0 gateway “lr-t0-provider02-EXT” that was specified during the namespace creation workflow.

This T1 gateway is instantiated on the same edge cluster as the custom T0 gateway (“VxDC01-C01-EC02”).

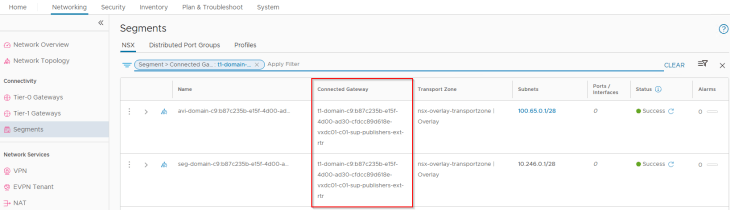

Similar to the previous topologies, two default segments are created, both attached to the namespace T1 gateway. We also have additional dedicated segments for each TKG service cluster that is created under the vSphere namespace, all upstreamed to the same namespace T1 gateway.

- Namespace network: This is where the vSphere pods attach. This network is carved out from the namespace network specified in the vSphere namespace creation workflow (10.246.0.0/16).

- AVI data segment: This is a non-routable subnet on the CGNAT range and has DHCP enabled. This is where the AVI SE data interfaces attach. For each vSphere namespace that is created, a dedicated interface is consumed on the SEs from the same SE Group that is used for the supervisor.

- TKG Service cluster network: This is where TKG service clusters attach. One segment will be created for each TKG service cluster. This network is carved out from the namespace network (10.246.0.0/16) specified in the vSphere namespace creation workflow.
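To get a feel for how segments are carved out of the namespace network, here is a small illustrative sketch using Python's `ipaddress` module. The /28 segment size is an assumption (it depends on the namespace subnet prefix chosen in the workflow), and the code only shows the arithmetic, not the order in which the platform actually allocates subnets.

```python
from ipaddress import ip_network
from itertools import islice

namespace_cidr = ip_network("10.246.0.0/16")  # namespace network from this article
SEGMENT_PREFIX = 28                           # assumption: namespace subnet prefix of /28

# The first few candidate segment subnets inside the namespace network
segments = list(islice(namespace_cidr.subnets(new_prefix=SEGMENT_PREFIX), 3))
for seg in segments:
    print(seg)

# How many segments of this size fit in the namespace network
total_segments = 2 ** (SEGMENT_PREFIX - namespace_cidr.prefixlen)
print(f"{total_segments} segments of /{SEGMENT_PREFIX} fit in {namespace_cidr}")
```

With these assumed values, a /16 namespace network leaves room for 4096 segments, which covers the namespace network itself plus one segment per TKG service cluster.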

Note that we haven’t deployed any TKG service clusters for this article, as there are no networking changes specific to TKG service clusters to highlight. However, the routing considerations discussed towards the end of this article must be addressed before spinning up any TKG service clusters.

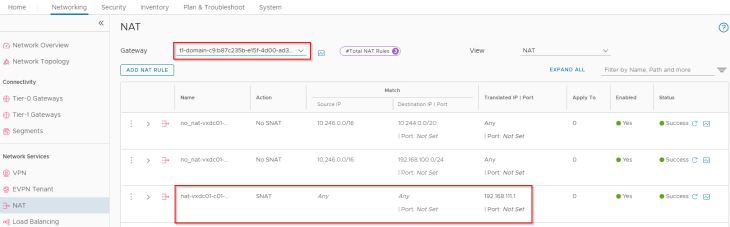

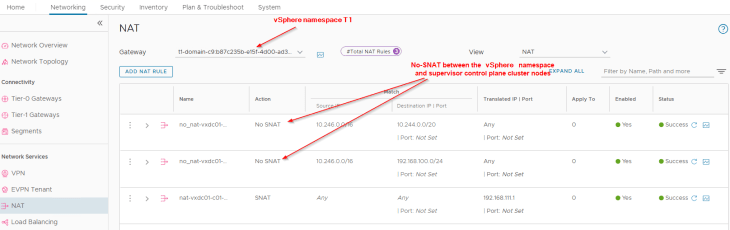

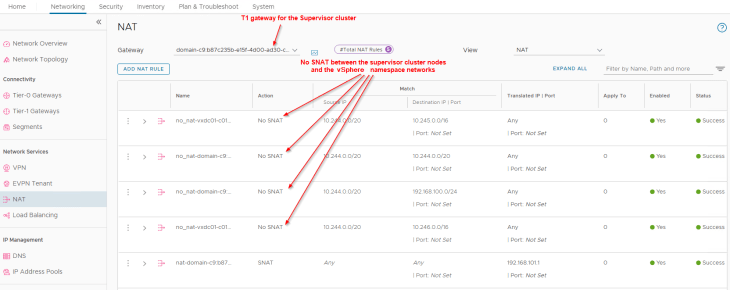

Since we have created the vSphere namespace with NAT mode enabled, we have an SNAT rule created under the namespace T1 gateway for outbound communication. This SNAT IP is taken from the Egress CIDR that we specified during the namespace creation workflow. Note that east-west communication between the supervisor and vSphere namespaces will always happen with no-SNAT (we should see no-SNAT rules under the T1 gateways of the supervisor and the vSphere namespaces).
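The combined effect of the SNAT and no-SNAT rules can be modelled roughly as below. This is a simplified sketch and not how NSX evaluates NAT rules internally (real rules carry priorities and more match criteria). The egress IP is a hypothetical address standing in for one taken from the Egress CIDR.

```python
from ipaddress import ip_address, ip_network

NAMESPACE_NET = ip_network("10.246.0.0/16")            # namespace network from this article
NO_SNAT_DESTINATIONS = [ip_network("10.244.0.0/20")]   # supervisor network from this article
EGRESS_IP = ip_address("192.168.105.1")                # hypothetical SNAT IP from the Egress CIDR


def translate_source(src, dst):
    """Return the source IP as seen after the namespace T1 gateway."""
    src, dst = ip_address(src), ip_address(dst)
    if src not in NAMESPACE_NET:
        return src        # not namespace traffic, left untouched in this model
    if any(dst in net for net in NO_SNAT_DESTINATIONS):
        return src        # east-west towards the supervisor: no-SNAT
    return EGRESS_IP      # everything else: SNAT to the egress IP


print(translate_source("10.246.0.5", "10.244.0.10"))  # east-west, source preserved
print(translate_source("10.246.0.5", "8.8.8.8"))      # outbound, source translated
```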

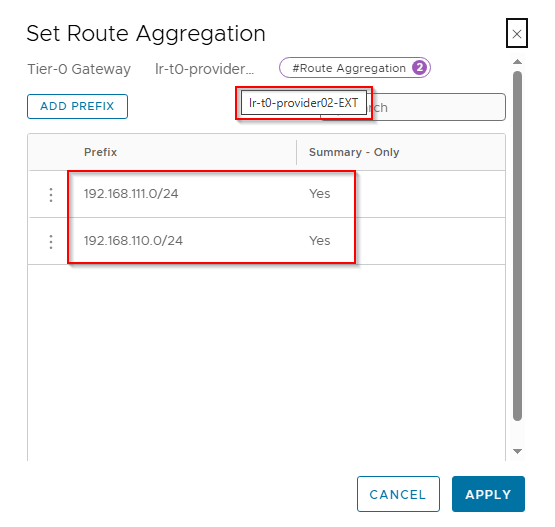

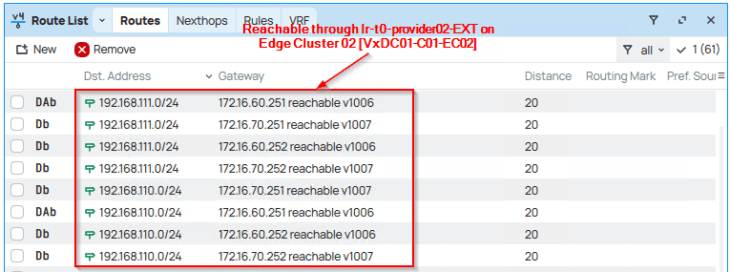

Because we advertised the new Ingress and Egress networks from the custom T0 gateway with route aggregation, we see the summary route in the TOR physical fabrics.
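As a quick illustration of the aggregation arithmetic, the sketch below collapses two adjacent networks into a single summary prefix using Python's `ipaddress` module. Both CIDRs are hypothetical placeholders, since the actual Ingress and Egress values are only visible in the screenshots.

```python
from ipaddress import collapse_addresses, ip_network

ingress = ip_network("192.168.104.0/24")  # hypothetical Ingress CIDR
egress = ip_network("192.168.105.0/24")   # hypothetical Egress CIDR

# Adjacent, properly aligned networks collapse into one summary prefix
summary = list(collapse_addresses([ingress, egress]))
print(summary)
```

Note that this only works out to a single prefix when the two networks are contiguous and aligned on the summary boundary; otherwise `collapse_addresses` returns them unchanged.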

Reviewing AVI objects

Now let’s review the AVI objects that are created by the workflow.

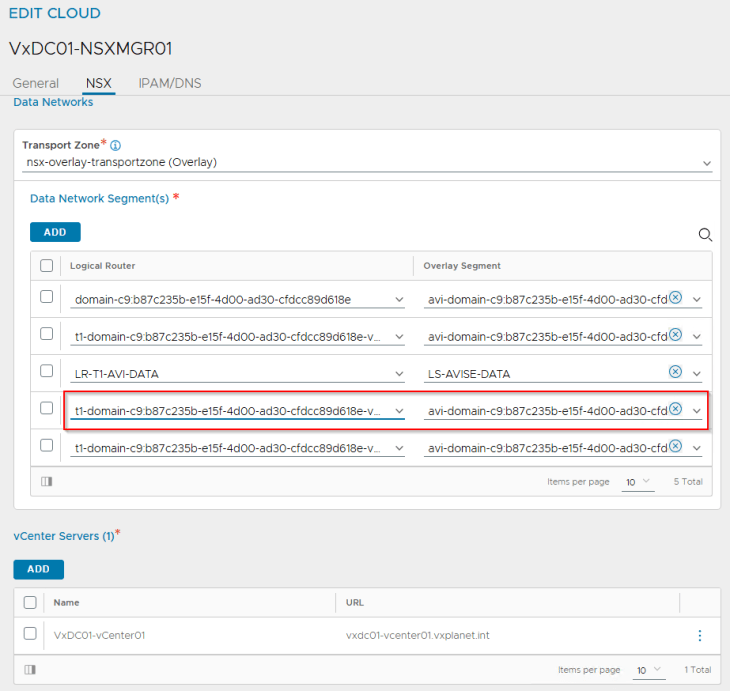

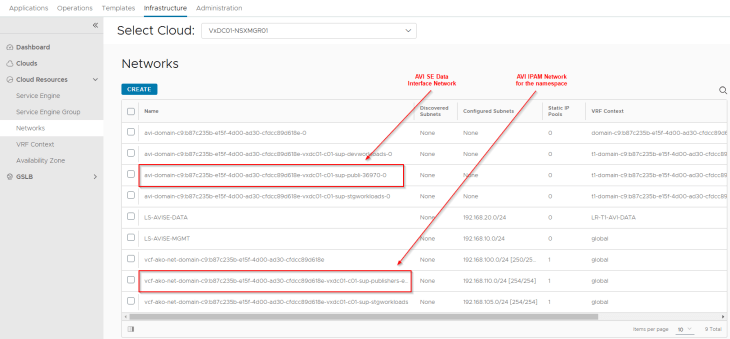

The dedicated T1 gateway created for the vSphere namespace and the respective AVI data segment will be added by the workflow as data networks to the AVI cloud connector.

We will see two networks:

- AVI data network: This is where the AVI SE data interface for this vSphere namespace attaches. DHCP is enabled on this data network (through segment DHCP in NSX).

- VIP network: Because we are overriding the network settings from the supervisor with a custom Ingress network, we should see a new IPAM network created and added to the NSX cloud connector.

In the IPAM profile, we should now see three networks:

- one for the supervisor and all vSphere namespaces inheriting network settings from the supervisor, which we discussed in Part 3 and Part 4

- the second for vSphere namespaces with a custom Ingress network, which we discussed in Part 5

- and the third for vSphere namespaces with a custom T0 gateway and Ingress network, which we created in this article.

Routing considerations between vSphere supervisor and vSphere namespace with custom T0 gateway

One of the routing requirements between the vSphere namespaces and the vSphere supervisor is that they need to be able to communicate with each other without NAT. If this requirement is not met, we won’t be able to successfully deploy a TKG service cluster in the vSphere namespace.

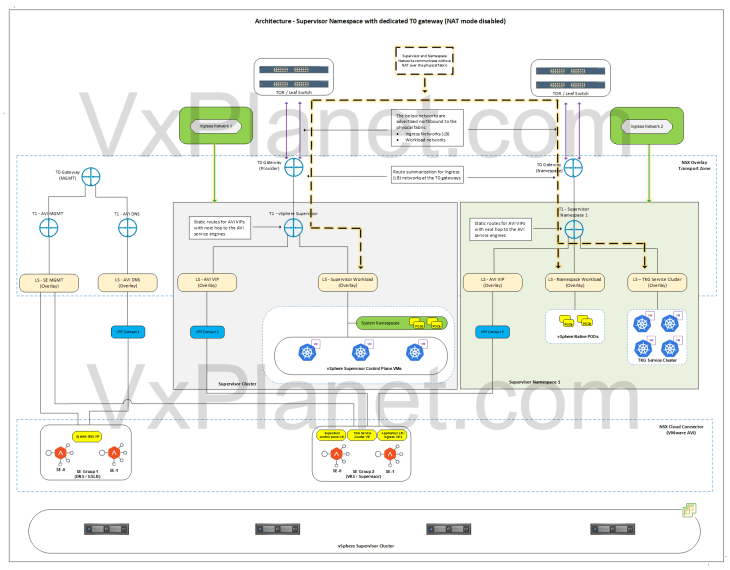

The vSphere supervisor and a vSphere namespace with a custom T0 gateway can be deployed in the below combinations:

- Both vSphere supervisor and vSphere namespace are in no-NAT mode

- vSphere supervisor is in no-NAT mode and vSphere namespace is in NAT mode

- vSphere supervisor is in NAT mode and vSphere namespace is in no-NAT mode

- Both vSphere supervisor and vSphere namespace are in NAT mode

Scenario 1 (Both vSphere supervisor and vSphere namespace in no-NAT mode)

Because NAT mode is not used in supervisor and namespaces, supervisor workload network, namespace network and TKG cluster networks are advertised northbound from their respective T0 gateways to the physical fabrics. Routing between the vSphere namespace and the vSphere supervisor happens on the physical fabrics without SNAT, as shown in the below topology, and hence no additional routing configuration is required.

Scenario 2 (Both vSphere supervisor and vSphere namespace OR either of them in NAT mode)

In this scenario, the physical fabrics don’t have reachability information to the supervisor networks, the namespace networks, or both. Additional routing configuration is therefore required to allow no-SNAT communication between the vSphere namespaces and the vSphere supervisor.
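The two scenarios boil down to a simple rule: as soon as either side runs in NAT mode, extra routing is needed. A tiny sketch (the function name is just illustrative) that enumerates the four combinations listed earlier:

```python
from itertools import product


def needs_extra_routing(supervisor_nat, namespace_nat):
    """True when at least one side hides its networks from the physical fabrics."""
    return supervisor_nat or namespace_nat


for supervisor_nat, namespace_nat in product([False, True], repeat=2):
    verdict = "extra routing required" if needs_extra_routing(supervisor_nat, namespace_nat) else "fabric routing is enough"
    print(f"supervisor NAT={supervisor_nat}, namespace NAT={namespace_nat}: {verdict}")
```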

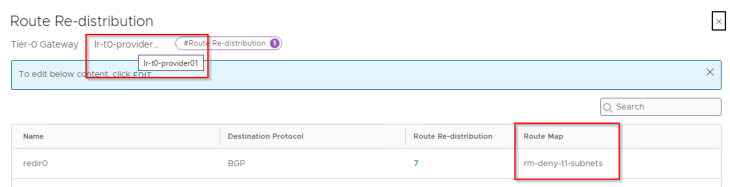

For a vSphere namespace deployed in NAT mode, we see no-SNAT rules configured under the respective namespace T1 gateway, and a route-map applied to the custom T0 gateway that filters the namespace networks from getting advertised to the physical fabrics.

If the vSphere supervisor is deployed in NAT mode, we see no-SNAT rules configured under the supervisor T1 gateway to all the vSphere namespaces, and a route-map filter on the provider T0 gateway.
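Conceptually, the route-map filter behaves like a deny list applied to the prefixes that the T0 gateway would otherwise advertise. The sketch below models that idea in plain Python; it is not the actual route-map created by the workflow, and the candidate prefixes are hypothetical examples.

```python
from ipaddress import ip_network


def advertised(candidates, denied):
    """Drop every candidate prefix that falls inside a denied network."""
    denied_nets = [ip_network(d) for d in denied]
    return [
        c for c in candidates
        if not any(ip_network(c).subnet_of(d) for d in denied_nets)
    ]


candidates = [
    "10.246.0.0/28",     # a segment carved from the namespace network
    "10.246.0.16/28",    # another segment carved from the namespace network
    "192.168.104.0/23",  # hypothetical Ingress / Egress summary route
]
denied = ["10.246.0.0/16"]  # namespace network hidden behind NAT

print(advertised(candidates, denied))
```

In this model only the Ingress / Egress summary survives, which matches what we expect to see on the physical fabrics for a namespace in NAT mode.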

Because the vSphere namespace networks and vSphere supervisor networks are under different T0 gateways, how do they communicate with each other?

We have multiple options, and will adopt the solution that is easy to implement and scalable:

Solution 1: Route over the fabrics

Exclude the vSphere namespace or supervisor networks from the NSX route filter and allow it to route over the physical fabrics. This approach is not scalable, and defeats the purpose of NAT.

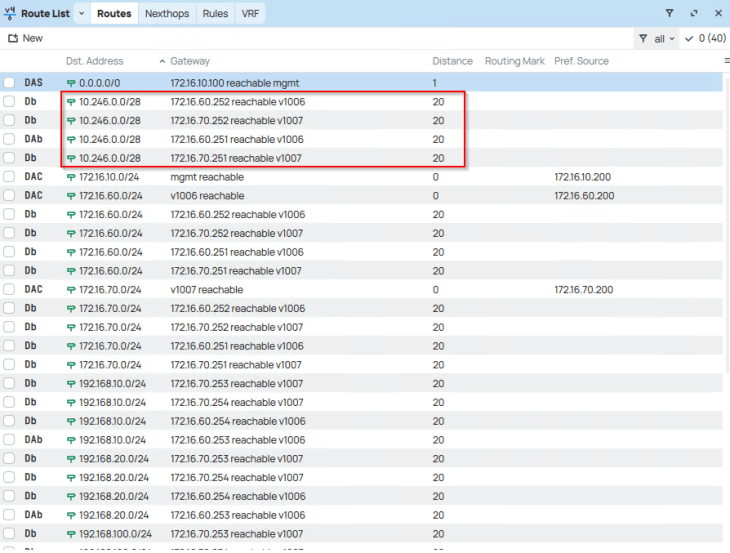

For example, below is a scenario where we have advertised the vSphere namespace networks (10.246.0.0/16) to the physical fabrics and configured a static route on the physical fabrics to the supervisor network (10.244.0.0/20), so that they are routed outside of the T0 gateway.

Solution 2: GRE tunnels

This requires a loopback interface from the T0 gateways to be advertised across the physical fabrics and adds dependencies / complexities, hence we won’t implement this. For more information on setting up GRE tunnels, please check out one of my articles below:

https://vxplanet.com/2024/07/19/nsx-gre-tunnels-part-1-bgp-over-gre/

Solution 3: Inter-T0 Transit Link with static routes

Because all the T0 gateways are under the same overlay transport zone, this will be an easy and scalable solution to implement, without additional dependency on the physical fabrics, as shown in the below topology.

Let’s implement this:

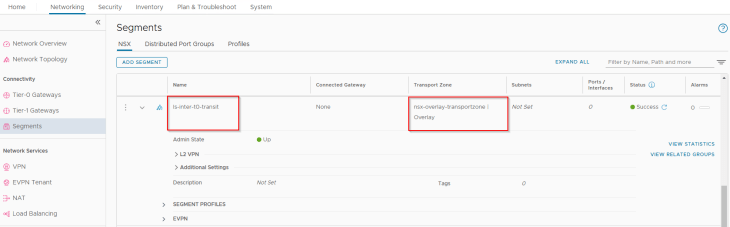

We will create an isolated overlay segment (on the same overlay transport zone that the supervisor cluster is prepared with).

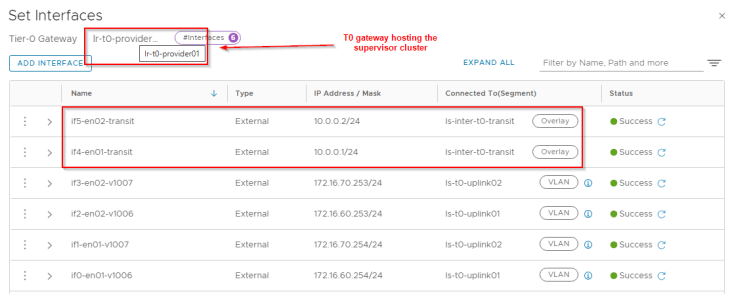

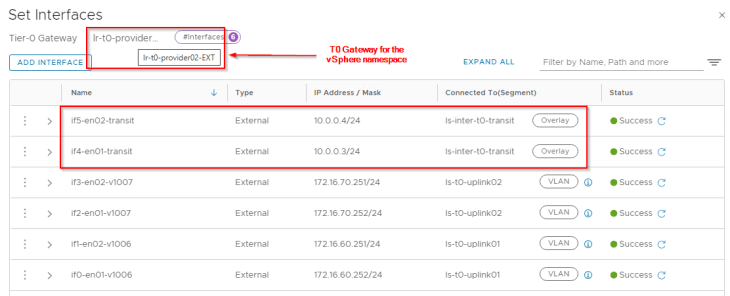

We will add this segment as external interfaces to both T0 gateways (Supervisor T0 and vSphere namespace T0).
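Before adding the interfaces, it is worth planning the addressing on the transit segment, since each edge node hosting a T0 gateway gets its own external interface. Below is a minimal sketch of such a plan. The transit subnet, the supervisor T0 name and the edge node names are all hypothetical, and two edge nodes per T0 gateway is an assumption.

```python
from ipaddress import ip_network

transit = ip_network("172.16.100.0/29")  # hypothetical Inter-T0 transit subnet

# Hypothetical edge nodes backing each T0 gateway
t0_edges = {
    "supervisor-t0": ["edge-a", "edge-b"],
    "lr-t0-provider02-EXT": ["edge-c", "edge-d"],
}

# Hand out one usable address per edge node, in order
hosts = transit.hosts()
plan = {
    t0: {edge: f"{next(hosts)}/{transit.prefixlen}" for edge in edges}
    for t0, edges in t0_edges.items()
}

for t0, interfaces in plan.items():
    for edge, address in interfaces.items():
        print(f"{t0:22} {edge:8} {address}")
```

A /29 gives six usable addresses, which is enough for four interfaces with a little headroom.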

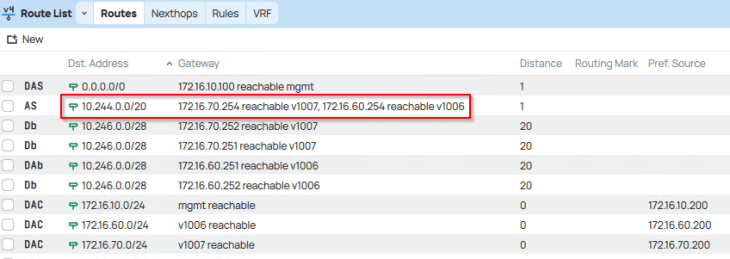

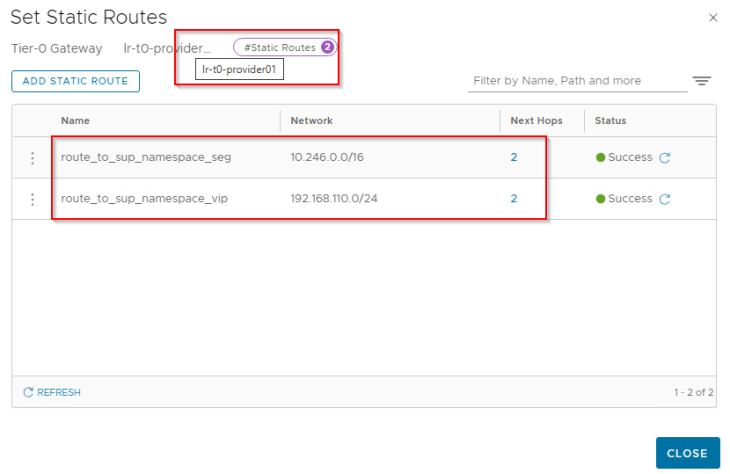

Now, we configure static routes so that supervisor networks and vSphere namespace networks can route over the Inter-T0 transit link. Below are the static routes configured on the supervisor T0 to reach the vSphere namespace networks and its VIP network on the namespace T0 gateway.

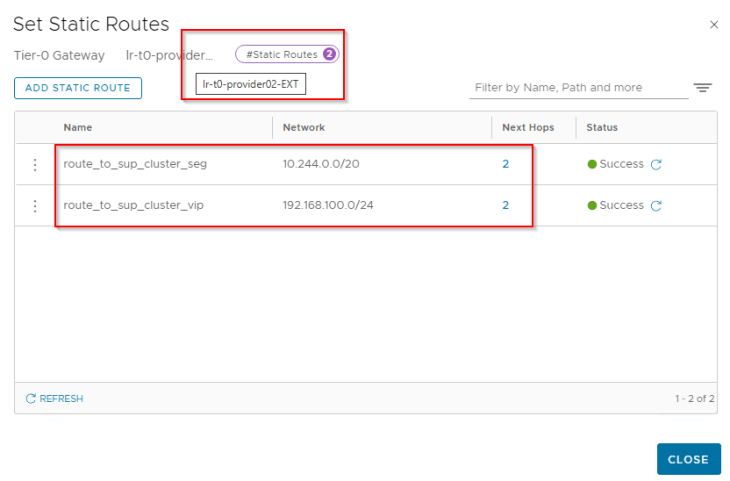

And below are the static routes configured on the namespace T0 gateway to reach the supervisor network and VIP network on the supervisor T0 gateway.

This will establish connectivity between the supervisor networks and vSphere namespace networks. At this moment, we should be good to deploy TKG service clusters in the vSphere namespace.
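For those who prefer automation over the UI, the static routes described above could be expressed as NSX Policy API payloads along the lines of the sketch below. The endpoint path and field names (`network`, `next_hops`, `ip_address`, `admin_distance`) reflect my understanding of the Policy API and should be verified against the API reference for your NSX version. The transit IPs, VIP networks, supervisor T0 ID and route names are hypothetical, and the T0 policy ID may differ from its display name. The code only prints the requests; it does not call NSX.

```python
import json


def static_route(name, network, next_hop):
    """Build a static route body with a single next hop."""
    return {
        "display_name": name,
        "network": network,
        "next_hops": [{"ip_address": next_hop, "admin_distance": 1}],
    }


SUPERVISOR_T0_TRANSIT_IP = "172.16.100.1"  # hypothetical transit interface IP
NAMESPACE_T0_TRANSIT_IP = "172.16.100.3"   # hypothetical transit interface IP

routes = {
    "supervisor-t0": [  # hypothetical ID of the supervisor T0 gateway
        static_route("to-namespace-network", "10.246.0.0/16", NAMESPACE_T0_TRANSIT_IP),
        static_route("to-namespace-vip", "192.168.104.0/24", NAMESPACE_T0_TRANSIT_IP),  # hypothetical VIP network
    ],
    "lr-t0-provider02-EXT": [  # assumes the policy ID matches the display name
        static_route("to-supervisor-network", "10.244.0.0/20", SUPERVISOR_T0_TRANSIT_IP),
        static_route("to-supervisor-vip", "192.168.100.0/24", SUPERVISOR_T0_TRANSIT_IP),  # hypothetical VIP network
    ],
}

for t0, entries in routes.items():
    for route in entries:
        print(f"PATCH /policy/api/v1/infra/tier-0s/{t0}/static-routes/{route['display_name']}")
        print(json.dumps(route, indent=2))
```

Keeping both directions in one structure makes it easy to confirm that every route on one T0 gateway has a matching return route on the other.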

If you are still reading, congratulations, we have reached the end of this article. We have one more vSphere namespace topology to cover, and will continue in Part 7 where we discuss vSphere namespaces on a dedicated T0 VRF gateway. Stay tuned!!!

I hope this article was informative. Thanks for reading.

Continue reading? Here are the other chapters of this series:

Part 1: Architecture and Topologies

https://vxplanet.com/2025/04/16/vsphere-supervisor-networking-with-nsx-and-avi-part-1-architecture-and-topologies/

Part 2: Environment Build and Walkthrough

https://vxplanet.com/2025/04/17/vsphere-supervisor-networking-with-nsx-and-avi-part-2-environment-build-and-walkthrough/

Part 3: AVI onboarding and Supervisor activation

https://vxplanet.com/2025/04/24/vsphere-supervisor-networking-with-nsx-and-avi-part-3-avi-onboarding-and-supervisor-activation/

Part 4: vSphere namespace with network inheritance

https://vxplanet.com/2025/05/15/vsphere-supervisor-networking-with-nsx-and-avi-part-4-vsphere-namespace-with-network-inheritance/

Part 5: vSphere namespace with custom Ingress and Egress network

https://vxplanet.com/2025/05/16/vsphere-supervisor-networking-with-nsx-and-avi-part-5-vsphere-namespace-with-custom-ingress-and-egress-network/