Welcome back!!! I finally managed to scale out my home lab with an additional vSphere cluster to support topologies with multiple vSphere supervisors on NSX and AVI. The next two chapters (Part 8 and Part 9) are going to be interesting, as we discuss vSphere supervisor topologies at scale, covering two design options:

- vSphere supervisors on a shared NSX transport zone, and

- vSphere supervisors on dedicated NSX transport zones

This is Part 8, and we will discuss the first scenario: vSphere supervisors on a shared NSX transport zone.

Let’s get started:

If you haven’t been following along, please scroll down to the bottom of this article, where I have provided links to the previous chapters of this blog series.

vSphere supervisors on shared NSX transport zone

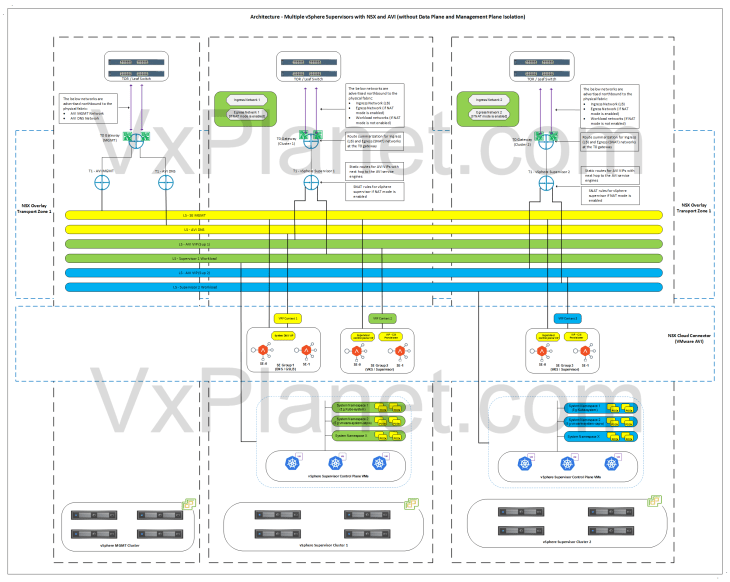

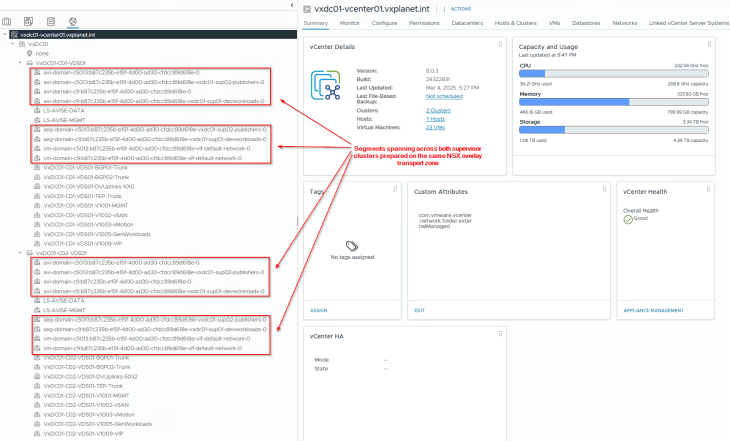

As discussed in Part 1 (Architecture and Topologies), below is the network architecture of two vSphere supervisors on a shared NSX overlay transport zone:

Below is a recap of what we discussed in Part 1:

- In this architecture with a shared overlay transport zone, there is no network isolation across vSphere clusters as the segments created for a supervisor span across and are consumable by all the vSphere clusters that are part of this overlay transport zone.

- Depending on the use case, the T0 gateway can be either:

  - A single shared T0 gateway for multiple supervisors: here, the T0 edge cluster is placed on a shared management & edge vSphere cluster or on a dedicated edge vSphere cluster.

  - A dedicated T0 gateway for each supervisor: here, the T0 edge cluster is co-located with each supervisor cluster. This is the topology we will implement in this article.

- Each supervisor will have dedicated service & pod CIDRs, namespace networks, ingress networks and egress networks.

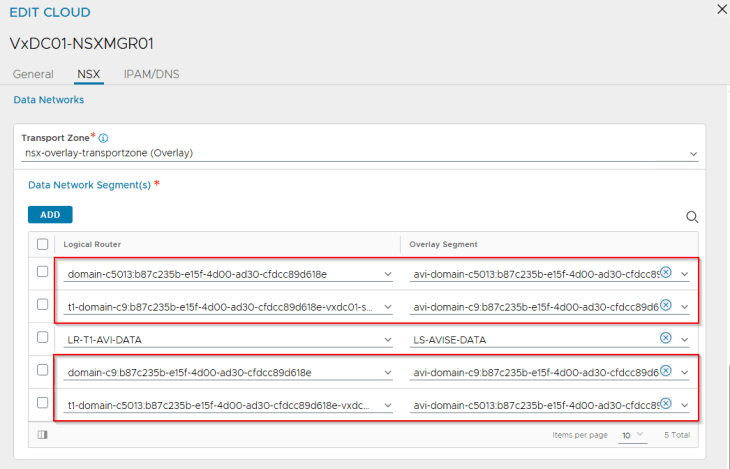

- Because a single NSX overlay transport zone is used, both supervisors will use the same NSX cloud connector in AVI.

- A dedicated SE Group will be used per supervisor.

- As in the previous articles, we will have a dedicated T1 gateway upstreamed to a dedicated management T0 gateway to handle AVI SE management traffic.

- Optionally, a dedicated SE Group can be used to host the AVI system DNS service. The AVI DNS profile uses this service to support Ingress services of TKG service clusters.
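To make the recap concrete, here is a small sketch that encodes the design rules above as checks: one overlay transport zone and one AVI NSX cloud connector shared by both supervisors, and a dedicated T0 gateway, edge cluster and SE group per supervisor (the dedicated-T0 variant implemented in this article). It also assumes, as my own interpretation of "dedicated CIDRs", that the routable namespace, ingress and egress CIDRs of the two supervisors must not overlap, since both sets of networks live on the same overlay and are advertised to the same physical fabric. The cluster, edge cluster and T0 names come from this lab; the transport zone, cloud, SE group names and all CIDRs are hypothetical.

```python
from dataclasses import dataclass
from ipaddress import ip_network
from itertools import combinations
from typing import List, Tuple


@dataclass(frozen=True)
class Supervisor:
    name: str
    transport_zone: str
    avi_cloud: str
    t0_gateway: str
    edge_cluster: str
    se_group: str
    cidrs: Tuple[str, ...]  # routable namespace, ingress and egress CIDRs (hypothetical)


def check_shared_tz_topology(supervisors: List[Supervisor]) -> List[str]:
    """Return the design rules (as described in this article) that are violated."""
    problems = []
    if len({s.transport_zone for s in supervisors}) != 1:
        problems.append("all supervisors must be on one overlay transport zone")
    if len({s.avi_cloud for s in supervisors}) != 1:
        problems.append("one transport zone maps to one AVI NSX cloud connector")
    # Dedicated-T0 variant: T0, edge cluster and SE group are per supervisor.
    for attr in ("t0_gateway", "edge_cluster", "se_group"):
        values = [getattr(s, attr) for s in supervisors]
        if len(set(values)) != len(values):
            problems.append(f"{attr} is shared but should be dedicated")
    # Assumption: routable CIDRs must be unique across supervisors.
    for a, b in combinations(supervisors, 2):
        for ca in a.cidrs:
            for cb in b.cidrs:
                if ip_network(ca).overlaps(ip_network(cb)):
                    problems.append(f"{a.name} {ca} overlaps {b.name} {cb}")
    return problems


sup1 = Supervisor("supervisor-1", "tz-overlay", "nsx-cloud", "lr-t0-provider-c01",
                  "VxDC01-C01-EC01", "seg-supervisor-1",
                  ("10.244.0.0/20", "10.100.10.0/24", "10.100.11.0/24"))
sup2 = Supervisor("supervisor-2", "tz-overlay", "nsx-cloud", "lr-t0-provider-c02",
                  "VxDC01-C02-EC01", "seg-supervisor-2",
                  ("10.244.16.0/20", "10.100.20.0/24", "10.100.21.0/24"))

if __name__ == "__main__":
    print(check_shared_tz_topology([sup1, sup2]) or "topology OK")
```

Swapping in a shared T0 would be flagged here, which is only correct for the dedicated-T0 variant; for the shared-T0 variant you would drop `t0_gateway` and `edge_cluster` from the dedicated attributes.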

Let’s implement this:

Current Environment Walkthrough

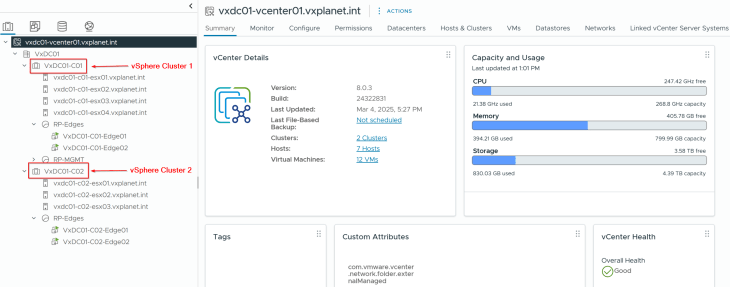

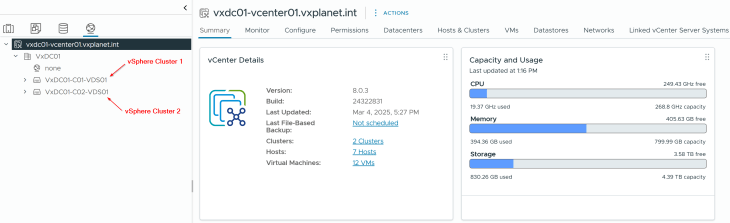

vSphere walkthrough

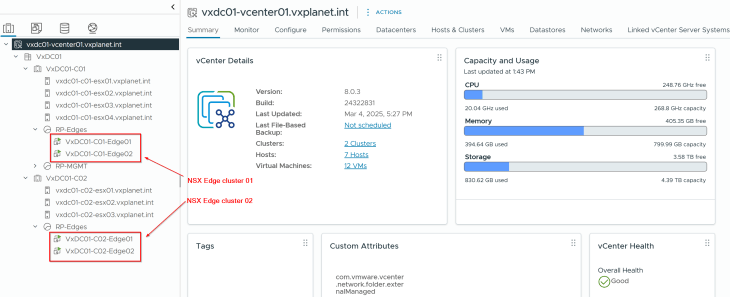

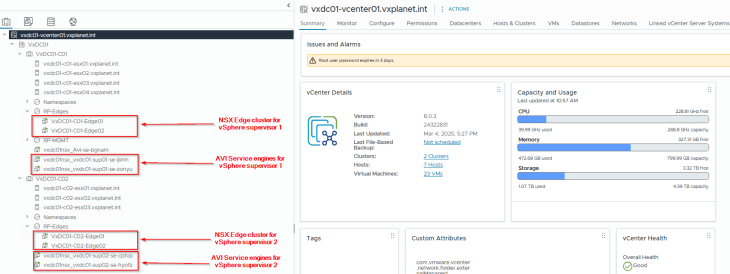

I am re-using the same vSphere environment, with the addition of a new vSphere cluster, VxDC01-C02. All the prerequisites for supervisor activation (including the AVI onboarding workflow) are in place. Please check out Part 2 and Part 3 for more details.

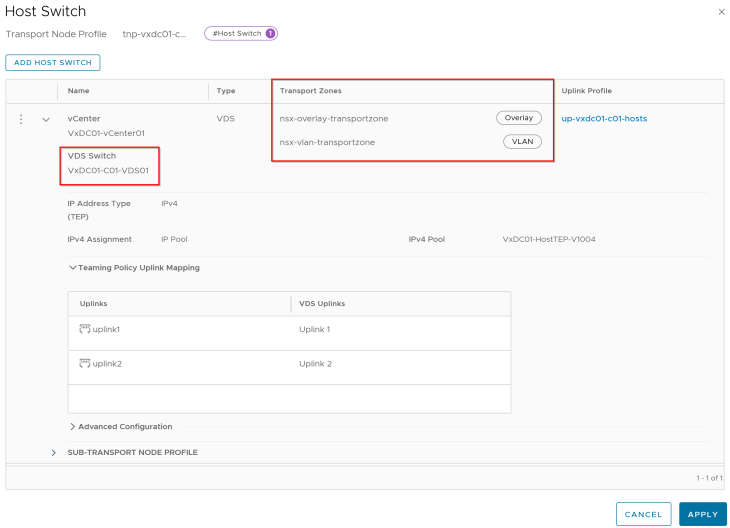

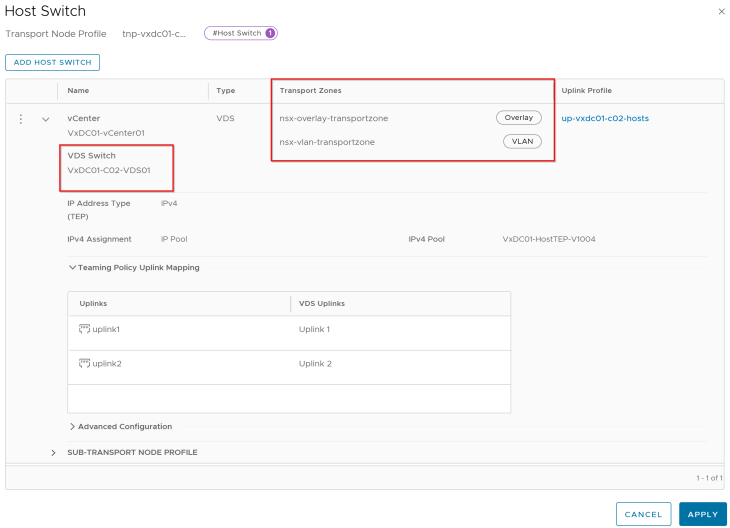

Each vSphere cluster is on its own dedicated vCenter VDS.

Note: If you are using a shared VDS across both vSphere clusters, make sure both vSphere clusters are prepared for NSX using the same transport node profile.
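If you want to catch the shared-VDS case before preparing clusters, the rule in the note above can be checked from a simple inventory. This is a minimal sketch, assuming you already have a mapping of each vSphere cluster to its VDS and the transport node profile used to prepare it (however you collect that); the VDS and profile names below are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple


def vds_with_mixed_profiles(clusters: Dict[str, Tuple[str, str]]) -> List[str]:
    """clusters maps a vSphere cluster name to (VDS name, transport node profile).

    Returns the VDS names whose clusters were prepared with more than one
    transport node profile, which the note above says to avoid.
    """
    profiles_per_vds: Dict[str, Set[str]] = defaultdict(set)
    for vds, tnp in clusters.values():
        profiles_per_vds[vds].add(tnp)
    return sorted(vds for vds, tnps in profiles_per_vds.items() if len(tnps) > 1)


# This lab: one VDS per cluster, so dedicated profiles are fine.
lab = {
    "VxDC01-C01": ("vds-c01", "tnp-c01"),
    "VxDC01-C02": ("vds-c02", "tnp-c02"),
}
# A hypothetical shared-VDS layout that breaks the rule.
shared = {
    "VxDC01-C01": ("vds-shared", "tnp-c01"),
    "VxDC01-C02": ("vds-shared", "tnp-c02"),
}

if __name__ == "__main__":
    print(vds_with_mixed_profiles(lab), vds_with_mixed_profiles(shared))
```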

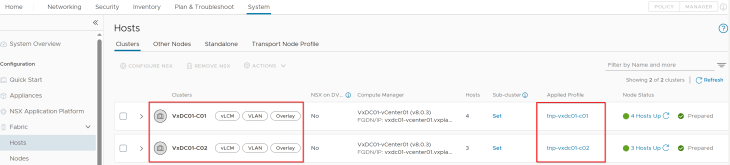

NSX walkthrough

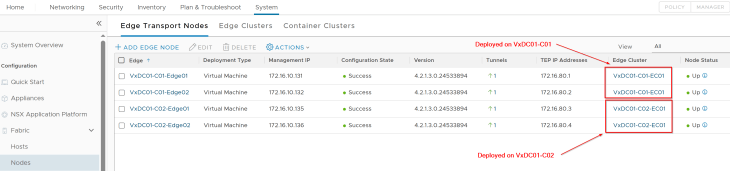

Both vSphere clusters VxDC01-C01 and VxDC01-C02 are prepared for the same NSX overlay transport zone using dedicated transport node profiles.

We have dedicated NSX edge clusters for each vSphere supervisor.

- VxDC01-C01-EC01: This is the dedicated edge cluster for vSphere supervisor 1 and is co-located on the same vSphere cluster, VxDC01-C01.

- VxDC01-C02-EC01: This is the dedicated edge cluster for vSphere supervisor 2 and is co-located on the same vSphere cluster, VxDC01-C02.

Both edge clusters are prepared on the same NSX overlay transport zone as the vSphere clusters.

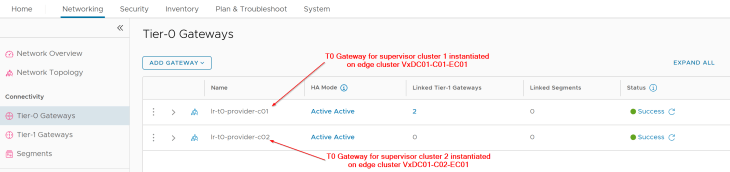

We have dedicated T0 Provider gateways for each vSphere supervisor. Due to limited resources in my home lab, the T0 gateway for supervisor 1 also handles the management traffic for the AVI SEs and System DNS, instead of the dedicated management T0 gateway described in the architecture.

The necessary T0 configurations, including BGP peering, route redistribution and route aggregation, are already in place. Please check out the previous articles to learn more about this configuration.

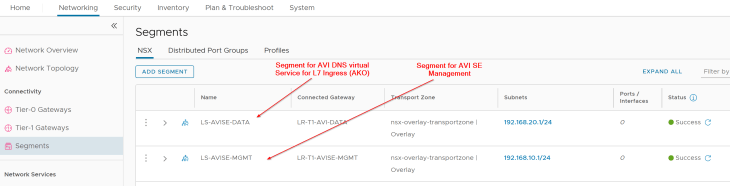

We have two segments: one for AVI SE management traffic and the other for AVI System DNS traffic, each attached to its respective T1 gateway. The necessary segment and T1 configuration, such as DHCP and route advertisement, is already in place.
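If you prefer to verify these attachments outside the UI, the NSX Policy API lists segments at `GET /policy/api/v1/infra/segments`, and each segment carries a `connectivity_path` pointing at the gateway it is attached to. The sketch below only parses a response of that shape (no HTTP call is made), and the segment and T1 names in the sample are hypothetical, since the article does not show them.

```python
from typing import Dict, Optional, Tuple


def segment_attachments(response: dict) -> Dict[str, Optional[Tuple[str, str]]]:
    """Map segment display_name -> (gateway type, gateway id) from a segment list.

    connectivity_path looks like /infra/tier-1s/<id> or /infra/tier-0s/<id>;
    segments without one are not attached to any gateway and map to None.
    """
    attachments: Dict[str, Optional[Tuple[str, str]]] = {}
    for seg in response.get("results", []):
        path = seg.get("connectivity_path")
        if not path:
            attachments[seg["display_name"]] = None
            continue
        parts = path.strip("/").split("/")
        attachments[seg["display_name"]] = (parts[-2], parts[-1])
    return attachments


# Hypothetical response in the shape returned by GET /policy/api/v1/infra/segments
sample = {
    "result_count": 2,
    "results": [
        {"display_name": "seg-avi-se-mgmt", "connectivity_path": "/infra/tier-1s/t1-avi-se-mgmt"},
        {"display_name": "seg-avi-dns", "connectivity_path": "/infra/tier-1s/t1-avi-dns"},
    ],
}

if __name__ == "__main__":
    for name, gw in segment_attachments(sample).items():
        print(name, "->", gw)
```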

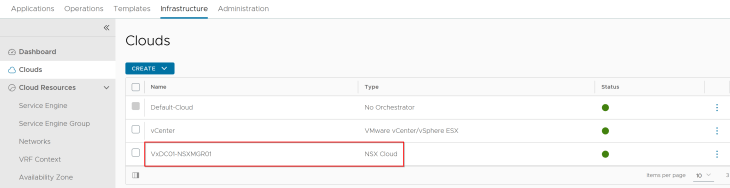

AVI walkthrough

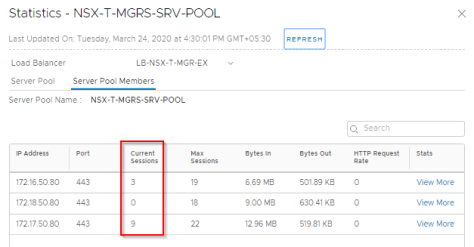

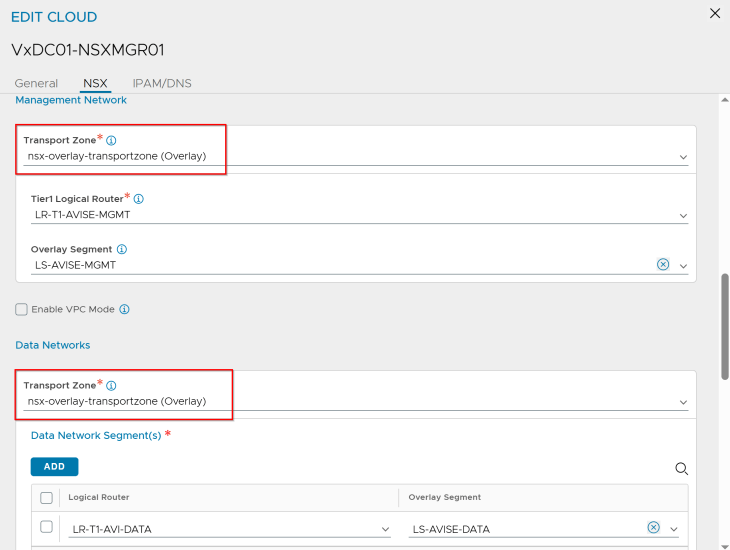

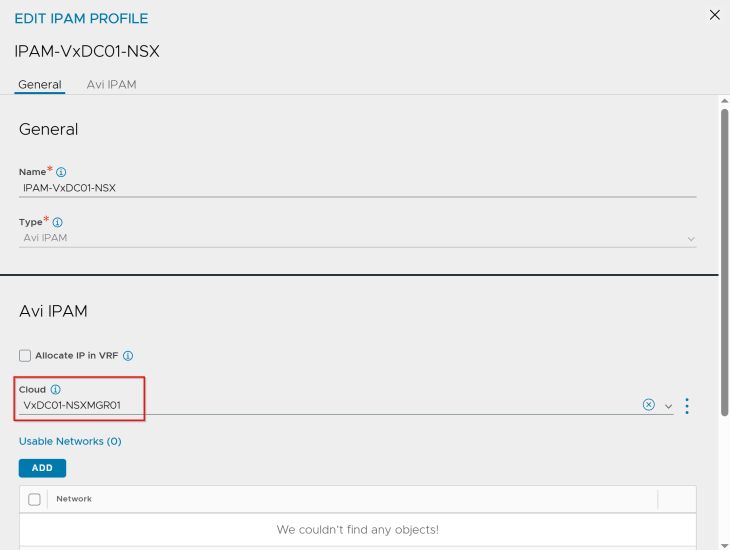

As discussed previously, each NSX overlay transport zone maps to a distinct cloud connector in AVI. As such, we have a single NSX cloud connector for the NSX overlay transport zone on which both vSphere clusters are prepared.

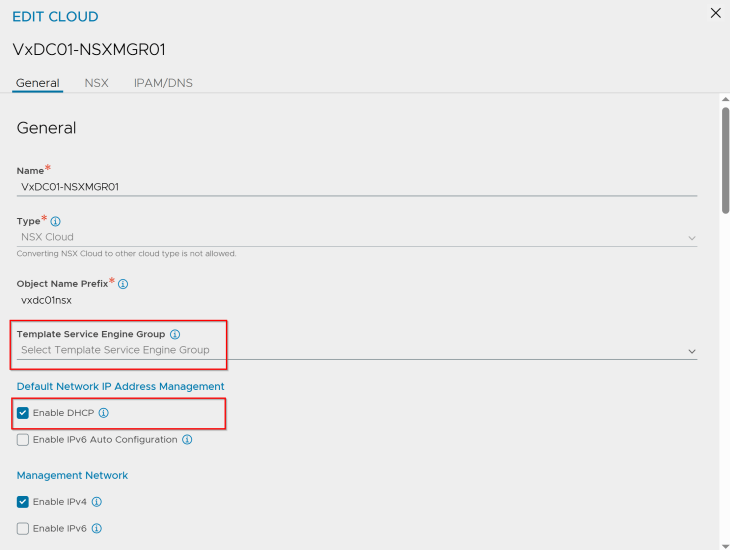

At this moment, we haven’t added a template service engine group under the NSX cloud connector. We will add it later, just before activating each vSphere supervisor, because the SE group created for a supervisor inherits its settings from whichever template is set on the cloud connector at activation time.

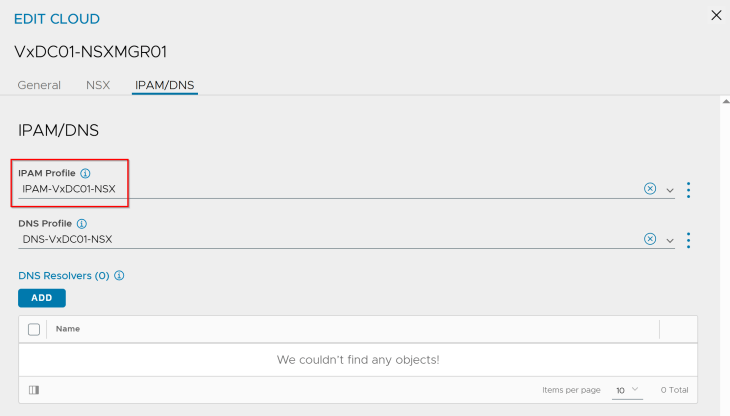

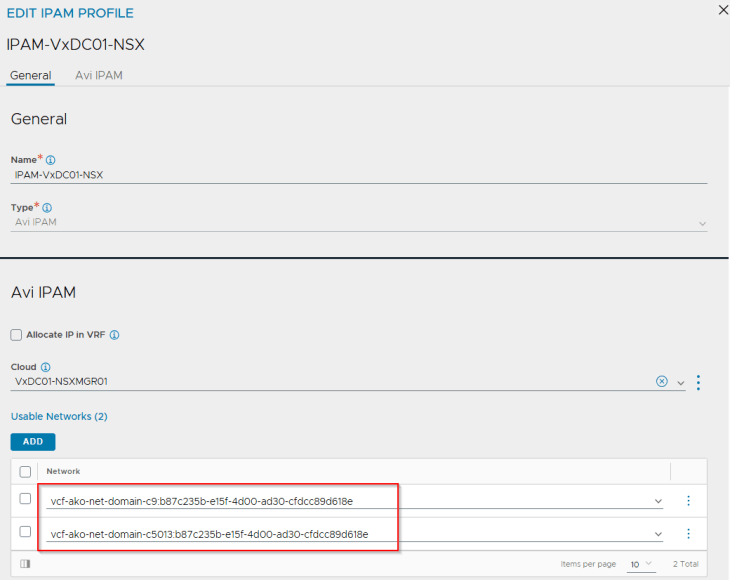

We have IPAM and DNS profiles attached to the cloud connector. The IPAM profile is a placeholder with no networks added; it will be updated dynamically as the vSphere supervisors and vSphere namespaces are configured.

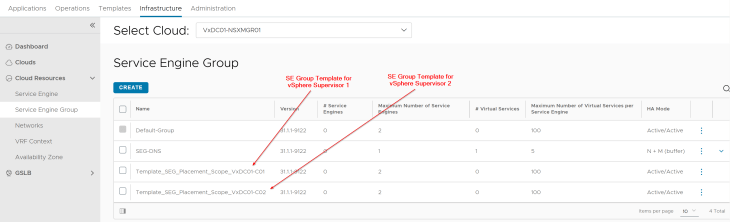

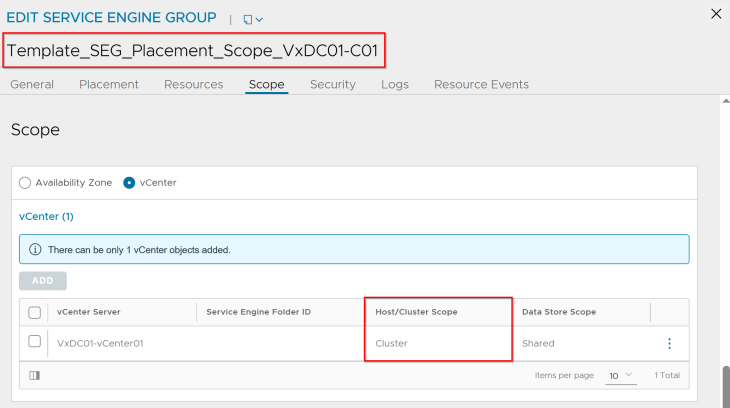

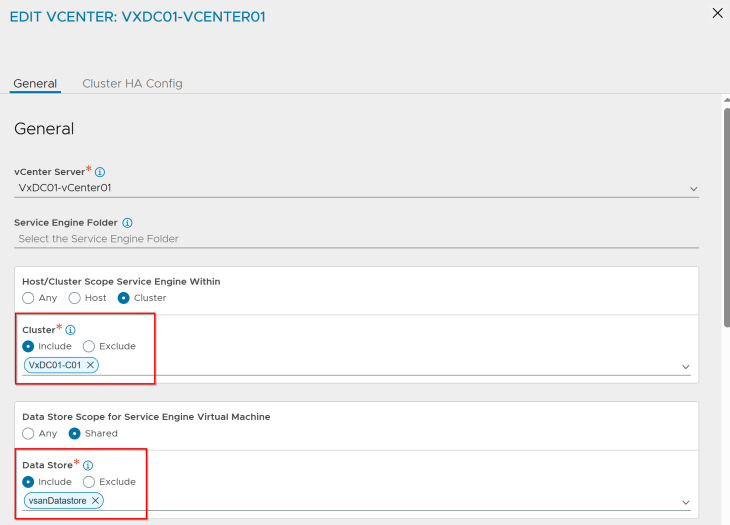

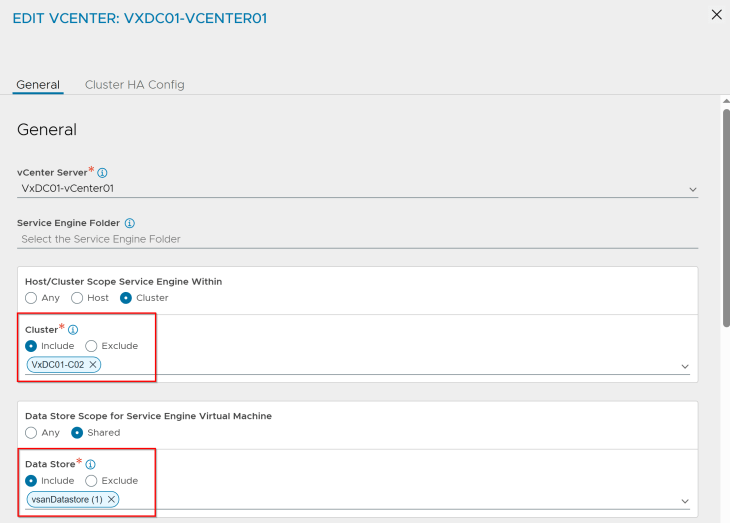

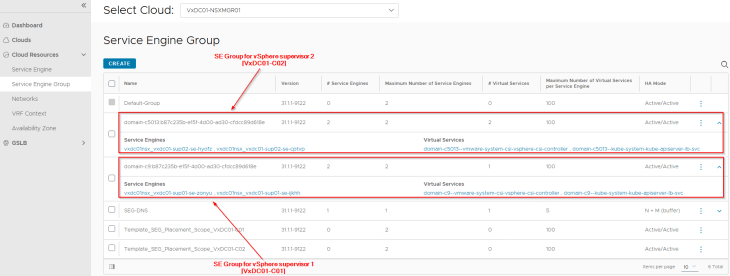

We have two SE Group templates created – one for vSphere supervisor 1 and the other for vSphere supervisor 2. The reason for this is to provide different placement settings for the service engines in vCenter, so that they are co-located on the respective vSphere clusters similar to how the NSX edge nodes are placed.

- SEs for vSphere supervisor 1 will be co-located on the vSphere cluster VxDC01-C01, and

- SEs for vSphere supervisor 2 will be co-located on the vSphere cluster VxDC01-C02

Below are the placement settings for the SE Group template for vSphere supervisor 1:

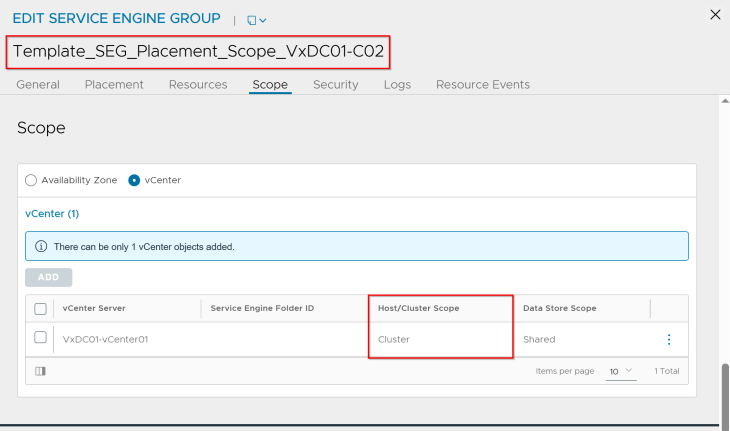

and below are the placement settings for the SE Group template for vSphere supervisor 2:

Okay, now let’s proceed to the activation of vSphere supervisors.

Activating vSphere Supervisor 1 (VxDC01-C01)

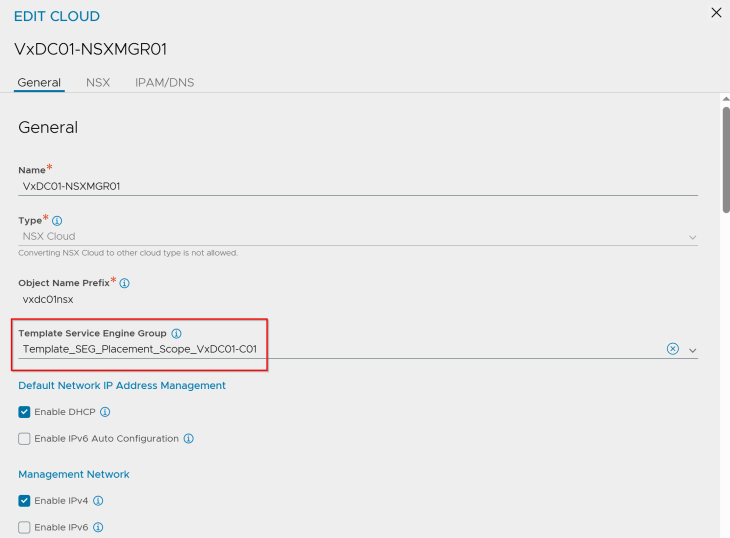

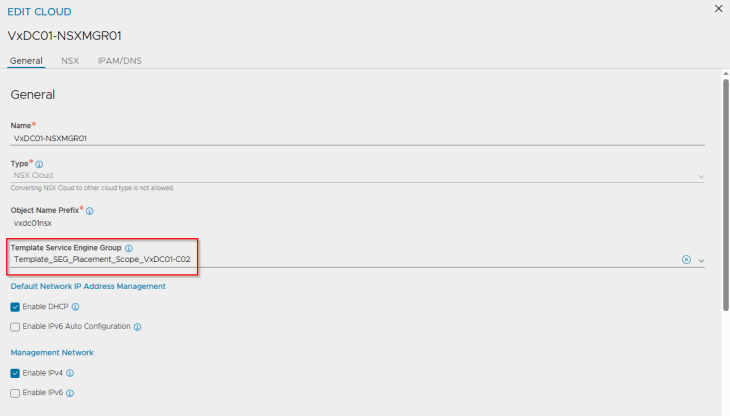

Remember, we skipped one setting in the NSX cloud connector properties: the template service engine group. It’s time to add it now. Let’s select the template that we prepared with the desired SE placement settings for supervisor 1.

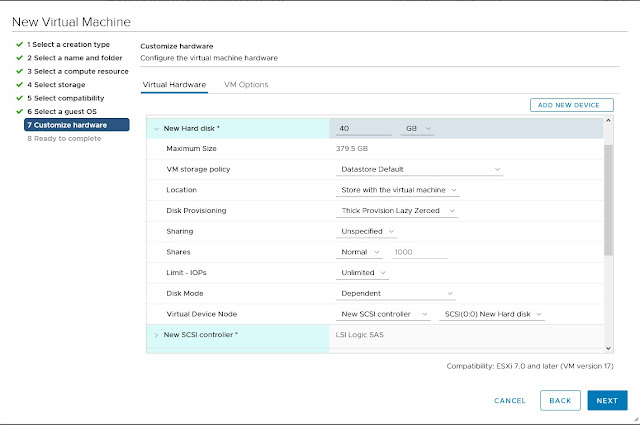

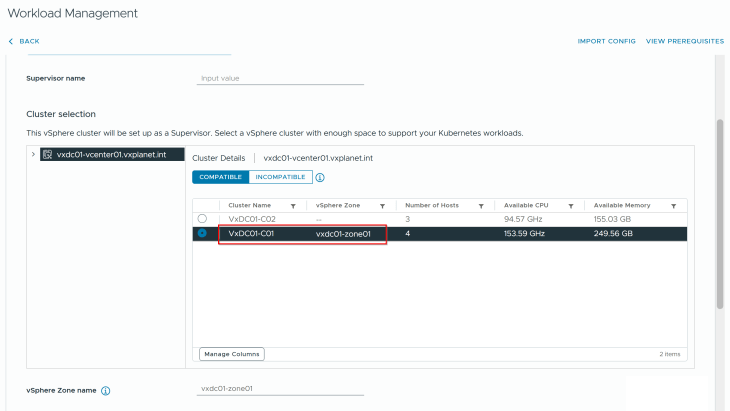

and we will run the supervisor 1 activation workflow.

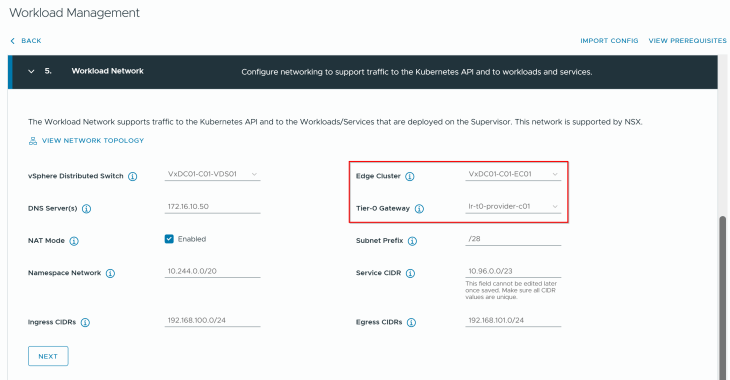

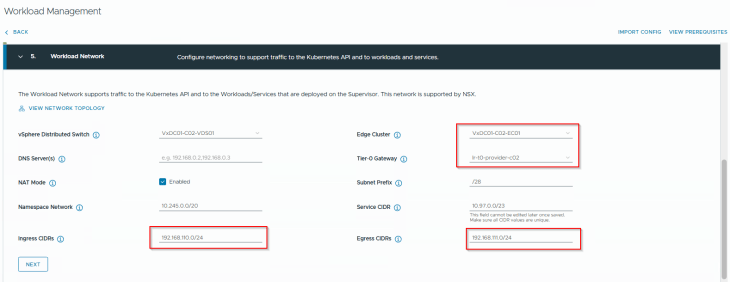

We will select the edge cluster “VxDC01-C01-EC01” and the T0 gateway “lr-t0-provider-c01” that is meant for this supervisor.

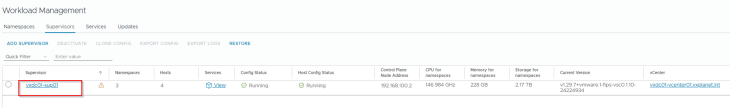

Success!!! vSphere supervisor 1 activation workflow has succeeded.

Activating vSphere Supervisor 2 (VxDC01-C02)

Now let’s edit the NSX cloud connector and update the template service engine group settings with the second template that we prepared with the desired SE placement settings for supervisor 2.

Note: Do not change the NSX cloud connector settings while vSphere supervisor 1 activation is in progress. Also, do not activate vSphere supervisor 2 while vSphere supervisor 1 activation is in progress, as supervisor 2 will inherit the wrong template SE group settings and its SEs will be placed in the wrong vSphere cluster.
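If you automate these activations, the ordering rule in the note above is worth encoding explicitly. Below is a minimal sketch of a strictly sequential runner. The three callbacks are hypothetical hooks into your own tooling and nothing here calls a real API; as far as I know, the setting being toggled corresponds to the `se_group_template_ref` field of the AVI Cloud object, but treat that mapping as an assumption to verify against your AVI version. The template names are also hypothetical.

```python
from typing import Callable, List, Sequence, Tuple


def activate_supervisors_sequentially(
    plan: Sequence[Tuple[str, str]],
    set_cloud_se_template: Callable[[str], None],
    start_activation: Callable[[str], None],
    wait_until_running: Callable[[str], None],
) -> List[str]:
    """Activate supervisors strictly one at a time.

    plan: (supervisor, SE group template) pairs, in activation order.
    The callbacks are hypothetical hooks; this function only enforces ordering.
    """
    log = []
    for supervisor, template in plan:
        # Point the shared NSX cloud connector at this supervisor's template.
        # Safe only because no other activation is running at this point.
        set_cloud_se_template(template)
        log.append(f"template={template}")
        start_activation(supervisor)
        log.append(f"activate={supervisor}")
        # Block until this supervisor is up before touching the cloud again,
        # so its SE group is cloned from the template set above.
        wait_until_running(supervisor)
        log.append(f"running={supervisor}")
    return log


if __name__ == "__main__":
    for line in activate_supervisors_sequentially(
        [("supervisor-1", "seg-template-c01"), ("supervisor-2", "seg-template-c02")],
        set_cloud_se_template=lambda t: None,
        start_activation=lambda s: None,
        wait_until_running=lambda s: None,
    ):
        print(line)
```

The design choice is simply that the template is only ever changed between a completed activation and the next one, which is the window the note describes as safe.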

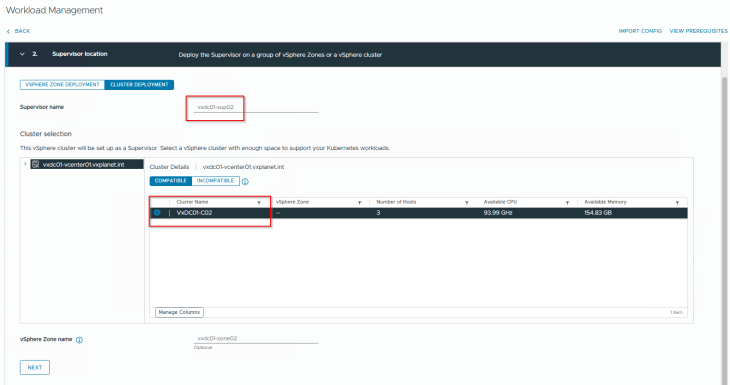

Let’s repeat the same workflow for supervisor 2 activation.

We will select the edge cluster “VxDC01-C02-EC01” and the T0 gateway “lr-t0-provider-c02” that is meant for this supervisor.

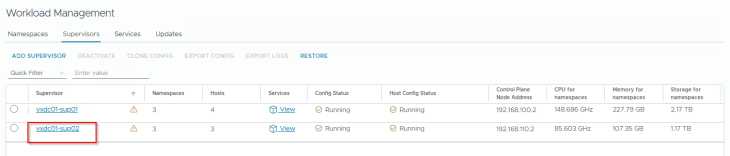

and finally, we should have both the supervisors up and running.

Reviewing NSX objects

Now let’s review the NSX objects that are created by the workflows.

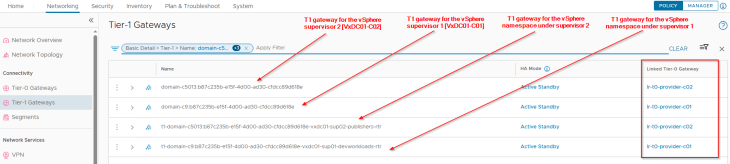

As discussed in previous chapters, dedicated T1 gateways are created for each vSphere supervisor and vSphere namespace. These T1 gateways are upstreamed to their respective T0 gateways, as shown below:

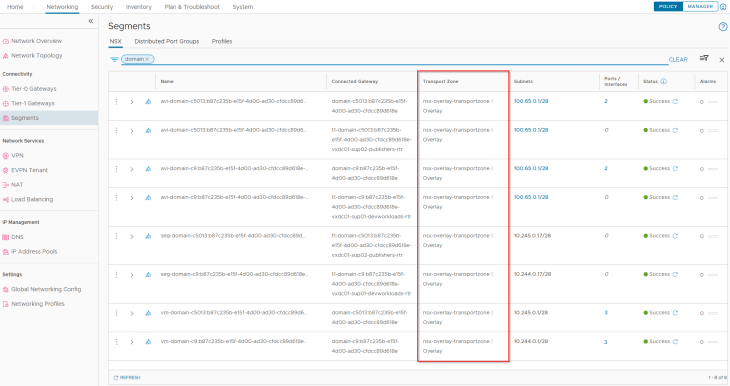

The supervisor workload segments, namespace segments, TKG service cluster segments and the AVI data segments for both vSphere supervisors are created on the same overlay transport zone, which spans both vSphere clusters. As such, there is no data plane isolation between the networks of the two vSphere supervisors.
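Both observations can also be checked from the NSX Policy API: Tier-1 gateways (`GET /policy/api/v1/infra/tier-1s`) expose their upstream T0 in `tier0_path`, and segments (`GET /policy/api/v1/infra/segments`) may carry a `transport_zone_path`. This sketch only parses responses of that shape; the T1 names and transport zone ID in the sample data are hypothetical (the workflow-generated names in a real environment are much longer), and segments that do not set `transport_zone_path` explicitly are simply skipped.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Set


def tier1s_by_tier0(tier1_list: dict) -> Dict[Optional[str], List[str]]:
    """Group Tier-1 display names by the last path segment of their tier0_path."""
    groups: Dict[Optional[str], List[str]] = defaultdict(list)
    for t1 in tier1_list.get("results", []):
        t0_path = t1.get("tier0_path")
        # None collects Tier-1s that are not upstreamed to any Tier-0.
        t0_id = t0_path.rsplit("/", 1)[-1] if t0_path else None
        groups[t0_id].append(t1["display_name"])
    return {t0: sorted(names) for t0, names in groups.items()}


def segment_transport_zones(segment_list: dict) -> Set[str]:
    """Distinct transport_zone_path values across segments that set one explicitly."""
    return {s["transport_zone_path"] for s in segment_list.get("results", [])
            if "transport_zone_path" in s}


TZ = "/infra/sites/default/enforcement-points/default/transport-zones/tz-overlay"
tier1_sample = {"results": [
    {"display_name": "t1-supervisor-1", "tier0_path": "/infra/tier-0s/lr-t0-provider-c01"},
    {"display_name": "t1-ns-a", "tier0_path": "/infra/tier-0s/lr-t0-provider-c01"},
    {"display_name": "t1-supervisor-2", "tier0_path": "/infra/tier-0s/lr-t0-provider-c02"},
]}
segment_sample = {"results": [
    {"display_name": "seg-supervisor-1-workload", "transport_zone_path": TZ},
    {"display_name": "seg-supervisor-2-workload", "transport_zone_path": TZ},
]}

if __name__ == "__main__":
    print(tier1s_by_tier0(tier1_sample))
    # A single TZ shared by both supervisors' segments means no data plane isolation.
    print(len(segment_transport_zones(segment_sample)) == 1)
```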

Reviewing AVI objects

Now let’s review the AVI objects that are created by the workflows.

The workflows add the T1 gateways created for both vSphere supervisors, along with their respective AVI data segments, to the NSX cloud connector as data networks.

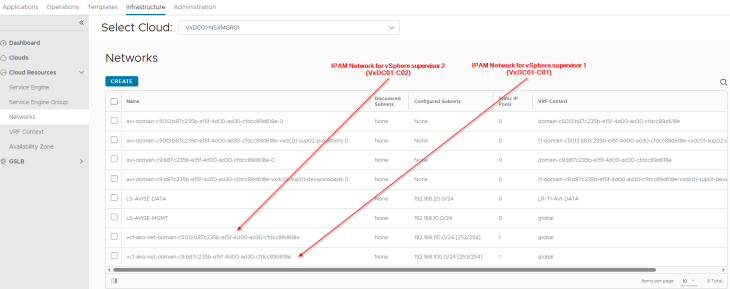

We have two IPAM networks created under the NSX cloud connector that map to the Ingress CIDRs defined in the supervisor activation workflows.

These IPAM networks are added dynamically to the IPAM profile for the NSX cloud connector.
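Because each IPAM network maps to one supervisor’s Ingress CIDR, and both share one cloud connector and IPAM profile, a VIP address alone tells you which supervisor it belongs to. A small sketch using Python’s `ipaddress` module; the CIDRs are hypothetical, so substitute the Ingress CIDRs you entered in your own activation workflows.

```python
from ipaddress import ip_address, ip_network
from typing import Dict, Optional


def owning_supervisor(vip: str, ingress_cidrs: Dict[str, str]) -> Optional[str]:
    """Return the supervisor whose Ingress CIDR contains vip, or None."""
    addr = ip_address(vip)
    for supervisor, cidr in ingress_cidrs.items():
        if addr in ip_network(cidr):
            return supervisor
    return None


# Hypothetical Ingress CIDRs, one IPAM network per supervisor.
INGRESS = {"supervisor-1": "10.100.10.0/24", "supervisor-2": "10.100.20.0/24"}

if __name__ == "__main__":
    print(owning_supervisor("10.100.20.5", INGRESS))
```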

We also see two SE Groups, one per vSphere supervisor, cloned from the respective template SE groups and inheriting the correct SE placement settings.

Let’s review the vCenter inventory and confirm that the SEs are placed as expected.
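In a larger environment, the same placement check can be scripted once you have an export of SE VMs with their SE group and vSphere cluster. This sketch assumes such an export already exists (how you produce it is up to your tooling); the SE VM and SE group names are hypothetical, and only the cluster names come from this lab.

```python
from typing import Dict, Iterable, List, Tuple


def misplaced_service_engines(
    inventory: Iterable[Tuple[str, str, str]],
    expected_cluster: Dict[str, str],
) -> List[str]:
    """Return SE VM names that are not on their SE group's expected cluster.

    inventory: (SE VM name, SE group, vSphere cluster) tuples.
    expected_cluster: SE group name -> vSphere cluster it should run on.
    SE groups missing from expected_cluster are ignored.
    """
    misplaced = []
    for vm, se_group, cluster in inventory:
        wanted = expected_cluster.get(se_group)
        if wanted is not None and wanted != cluster:
            misplaced.append(vm)
    return misplaced


expected = {"seg-supervisor-1": "VxDC01-C01", "seg-supervisor-2": "VxDC01-C02"}
inventory = [
    ("se-sup1-a", "seg-supervisor-1", "VxDC01-C01"),
    ("se-sup1-b", "seg-supervisor-1", "VxDC01-C01"),
    ("se-sup2-a", "seg-supervisor-2", "VxDC01-C02"),
    ("se-sup2-b", "seg-supervisor-2", "VxDC01-C02"),
]

if __name__ == "__main__":
    print(misplaced_service_engines(inventory, expected) or "all SEs correctly placed")
```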

Success!!! We have now validated the architecture for multiple vSphere supervisors on a shared NSX overlay transport zone.

At scale, I personally prefer the next design option (Part 9), with dedicated NSX overlay transport zones per supervisor, as it can provide the necessary data plane isolation and better manageability of the networks. We will discuss this next.

I hope this article was informative. Thanks for reading!!!

Continue reading? Here are the other chapters of this series:

Part 1: Architecture and Topologies

https://vxplanet.com/2025/04/16/vsphere-supervisor-networking-with-nsx-and-avi-part-1-architecture-and-topologies/

Part 2: Environment Build and Walkthrough

https://vxplanet.com/2025/04/17/vsphere-supervisor-networking-with-nsx-and-avi-part-2-environment-build-and-walkthrough/

Part 3: AVI onboarding and Supervisor activation

https://vxplanet.com/2025/04/24/vsphere-supervisor-networking-with-nsx-and-avi-part-3-avi-onboarding-and-supervisor-activation/

Part 4: vSphere namespace with network inheritance

https://vxplanet.com/2025/05/15/vsphere-supervisor-networking-with-nsx-and-avi-part-4-vsphere-namespace-with-network-inheritance/

Part 5: vSphere namespace with custom Ingress and Egress network

https://vxplanet.com/2025/05/16/vsphere-supervisor-networking-with-nsx-and-avi-part-5-vsphere-namespace-with-custom-ingress-and-egress-network/

Part 6: vSphere namespace with dedicated T0 gateways

https://vxplanet.com/2025/05/16/vsphere-supervisor-networking-with-nsx-and-avi-part-6-vsphere-namespace-with-dedicated-t0-gateway/

Part 7: vSphere namespace with dedicated VRF gateways

https://vxplanet.com/2025/05/20/vsphere-supervisor-networking-with-nsx-and-avi-part-7-vsphere-namespace-with-dedicated-t0-vrf-gateway/

Part 9: Multiple supervisors on dedicated NSX transport zones

https://vxplanet.com/2025/06/08/vsphere-supervisor-networking-with-nsx-and-avi-part-9-multiple-supervisors-on-dedicated-nsx-transport-zones/

Part 10: Zonal supervisor with AVI availability zones

https://vxplanet.com/2025/06/12/vsphere-supervisor-networking-with-nsx-and-avi-part-10-zonal-supervisor-with-avi-availability-zones/