NSX introduced multitenancy through the concept of projects in version 4.0.1 (API only). Multitenancy was enhanced with project UI options in NSX 4.1 and with Virtual Private Clouds (VPCs) in NSX 4.1.1.

NSX projects are a way to isolate networking and security objects across tenants within the same NSX deployment. Prior to projects, all networking and security configuration lived in the default space, which is owned by the NSX Enterprise Administrator. Each tenant (project) has RBAC policies enforced by the Enterprise Administrator from the default space. Each project has one or more project admins, who have complete access to the project configuration, and project users, who can provision and consume networking and security objects for their workloads. By default, each tenant (project) has access to the objects it creates and to those shared from the default space by the Enterprise Administrator. Objects in one project cannot be accessed by users in a different project.
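To make the visibility rule concrete, here is a minimal Python sketch of it. This is only a conceptual model, not NSX code or the NSX API: the `NsxObject` class, the `visible_objects` function and all object names are hypothetical and exist purely for illustration.

```python
from dataclasses import dataclass

DEFAULT_SPACE = "default"  # hypothetical label for the default space


@dataclass(frozen=True)
class NsxObject:
    """Hypothetical stand-in for any networking or security object."""
    name: str
    owner: str  # project that created the object, or DEFAULT_SPACE
    shared_with: frozenset = frozenset()  # projects the object is shared with


def visible_objects(project, objects):
    """Return the names of the objects a user of `project` can see."""
    visible = []
    for obj in objects:
        created_here = obj.owner == project
        shared_from_default = (
            obj.owner == DEFAULT_SPACE and project in obj.shared_with
        )
        if created_here or shared_from_default:
            visible.append(obj.name)
    return visible


# Illustrative inventory (all names are made up)
inventory = [
    NsxObject("t0-provider", DEFAULT_SPACE, frozenset({"project-a", "project-b"})),
    NsxObject("t1-a", "project-a"),
    NsxObject("seg-a-web", "project-a"),
    NsxObject("t1-b", "project-b"),
    NsxObject("edge-cluster-dmz", DEFAULT_SPACE),  # not shared with any project
]

print(visible_objects("project-a", inventory))
print(visible_objects("project-b", inventory))
```

In this model, project-a sees the shared provider T0 plus its own T1 and segment, but nothing that project-b created and nothing that the Enterprise Administrator has not shared.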

NSX Virtual Private Clouds (VPCs), introduced in NSX 4.1.1, extend multitenancy so that each project can further isolate its objects and offer a simplified self-service consumption model to application owners and users. VPCs hide the usual networking and security constructs behind a simplified, consumer-friendly provisioning portal. VPCs are configured within the context of a project, and each VPC represents an independent routing domain.

In this six-part blog series, we will cover NSX multitenancy in depth, so please follow along. Here is the breakdown:

Part 1: Introduction & Multitenancy models

Part 2: NSX Projects

Part 3: NSX Virtual Private Clouds (VPCs)

Part 4: Stateful Active-Active Gateways in Projects

Part 5: Edge cluster considerations and failure domains

Part 6: Integration with NSX Advanced Load Balancer

Let’s get started:

NSX Multitenancy models

Whenever a project is created, the project admins and users create the necessary T1 gateways and associated segments for their workloads. T0 Gateways and edge clusters cannot be created inside a project; they are shared with projects by the Enterprise Administrator from the default space.

Based on how the Provider T0 Gateways are leveraged by the projects and the level of data plane isolation, we have the following multitenancy models:

- Multitenancy with shared Provider T0 / VRF Gateway

- Multitenancy with dedicated Provider T0 / VRF Gateway

- Multitenancy with isolated data plane (Not yet supported)

Depending on how the edge clusters are shared with projects, we have two approaches, which we will discuss in more detail in Part 5:

- Shared edge cluster from the Provider T0 / VRF Gateway

- Dedicated edge cluster for projects

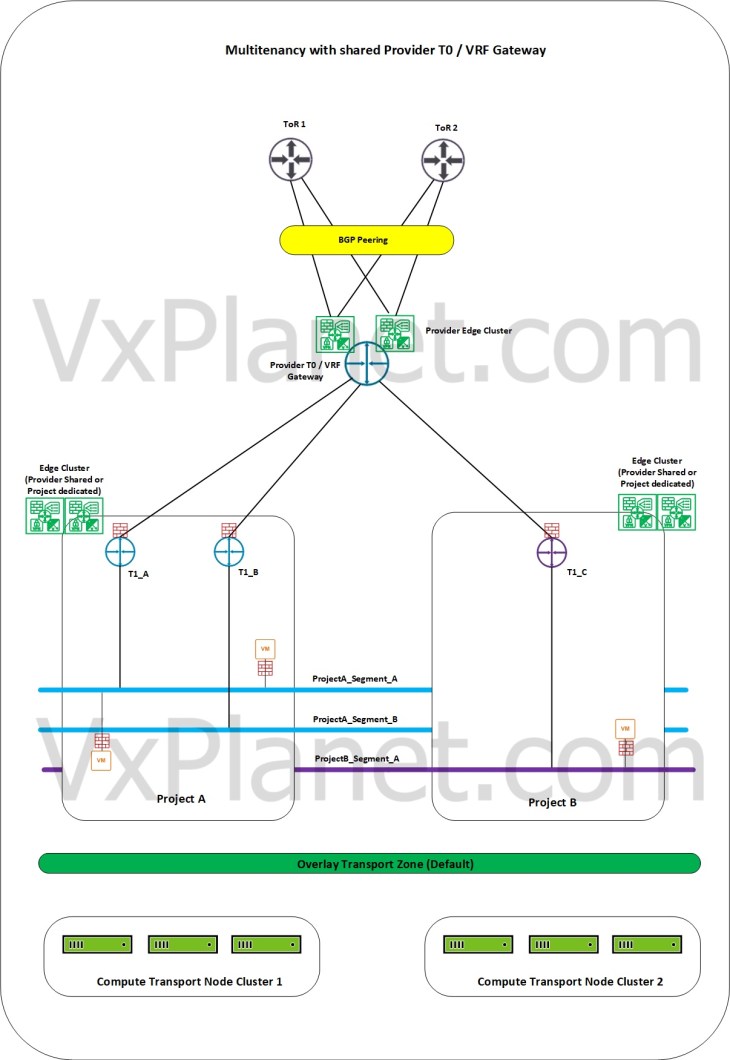

Multitenancy with shared Provider T0 / VRF Gateway

In this model, the Provider T0 / VRF Gateway is shared across multiple NSX projects. Each project can either share the edge cluster from the provider or have dedicated edge clusters for its stateful services. The project workloads (VMs) reside on dedicated or shared transport node clusters prepared on the same overlay transport zone (the default overlay TZ), so there is no data plane isolation between the projects. Currently, only the “default” overlay transport zone is supported for projects. This means that the segments created in one project are realized on all the compute clusters in the domain, but are hidden from other projects at the management plane level.

All tenant traffic is routed over the same provider edge nodes and physical fabric for N-S connectivity. Optionally, Enterprise Admins can implement route filtering for the projects to ensure that only approved project networks are advertised northbound.
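The idea behind route filtering can be sketched in a few lines of Python. This is only a conceptual model of the outcome, not how NSX is configured (the actual configuration is covered in Part 2). The `filter_advertised` function and the example prefixes are hypothetical.

```python
import ipaddress


def filter_advertised(project_networks, approved_prefixes):
    """Keep only the project networks that fall inside an approved prefix."""
    approved = [ipaddress.ip_network(p) for p in approved_prefixes]
    allowed = []
    for net in project_networks:
        candidate = ipaddress.ip_network(net)
        # subnet_of() requires both networks to be the same IP version,
        # so the version is compared first.
        if any(
            candidate.version == a.version and candidate.subnet_of(a)
            for a in approved
        ):
            allowed.append(net)
    return allowed


# Hypothetical example: the provider approves 10.10.0.0/16 for this project
project_networks = ["10.10.1.0/24", "10.10.2.0/24", "192.168.50.0/24"]
approved_prefixes = ["10.10.0.0/16"]

print(filter_advertised(project_networks, approved_prefixes))
```

Here the 192.168.50.0/24 network would exist inside the project but would not be advertised northbound, because it is outside the approved range.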

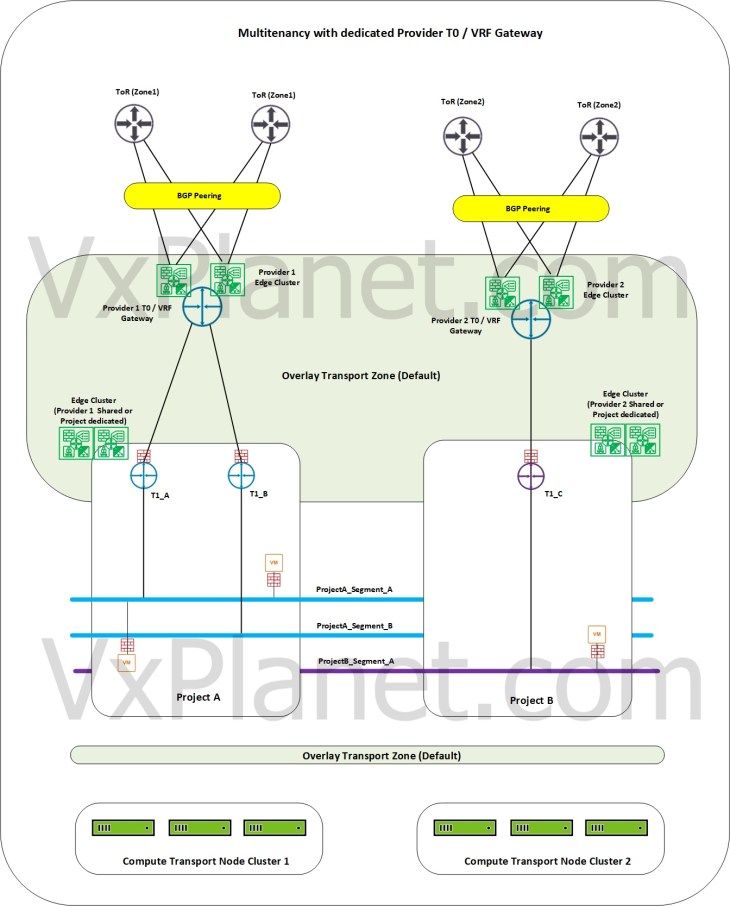

Multitenancy with dedicated Provider T0 / VRF Gateway

In this model, each NSX project is assigned a dedicated Provider T0 or VRF gateway. Projects can either share the edge cluster from the provider or have dedicated edge clusters for their stateful services. This model consumes additional compute resources if dedicated T0 gateways are assigned (for hosting additional NSX Edge nodes). It is especially useful for project workloads that need to be hosted in specific physical zones or that require ingress / egress traffic via specific physical fabrics (firewalls / gateways).

The project workloads (VMs) reside on dedicated or shared transport node clusters prepared on the same default overlay transport zone. The segments created in a project are realized on all the compute clusters in the domain, but are hidden from other projects at the management plane level.

Tenant traffic has a dedicated provider path for N-S connectivity. Optionally, Enterprise Admins can implement route filtering for the projects to ensure that only approved project networks are advertised northbound.

Note that dedicating a Provider T0 / VRF gateway to a project is NOT a hard enforcement. It’s only a design convention, and the same Provider T0 / VRF gateway can still be shared with other projects.
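Since the convention is not enforced, it may be worth auditing it from time to time. The sketch below assumes you have already collected which T0 / VRF gateways are shared with each project (how you collect that is outside this example). The `shared_t0s` function and the project and gateway names are hypothetical.

```python
from collections import defaultdict


def shared_t0s(project_t0s):
    """Return the T0 / VRF gateways that are used by more than one project."""
    users = defaultdict(list)
    for project, gateways in project_t0s.items():
        for gateway in gateways:
            users[gateway].append(project)
    return {
        gateway: sorted(projects)
        for gateway, projects in users.items()
        if len(projects) > 1
    }


# Hypothetical assignments: t0-a was meant to be dedicated to project-a
assignments = {
    "project-a": ["t0-a"],
    "project-b": ["t0-b"],
    "project-c": ["t0-a"],
}

print(shared_t0s(assignments))
```

An empty result would mean every gateway is used by a single project, that is, the dedicated model is being followed in practice.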

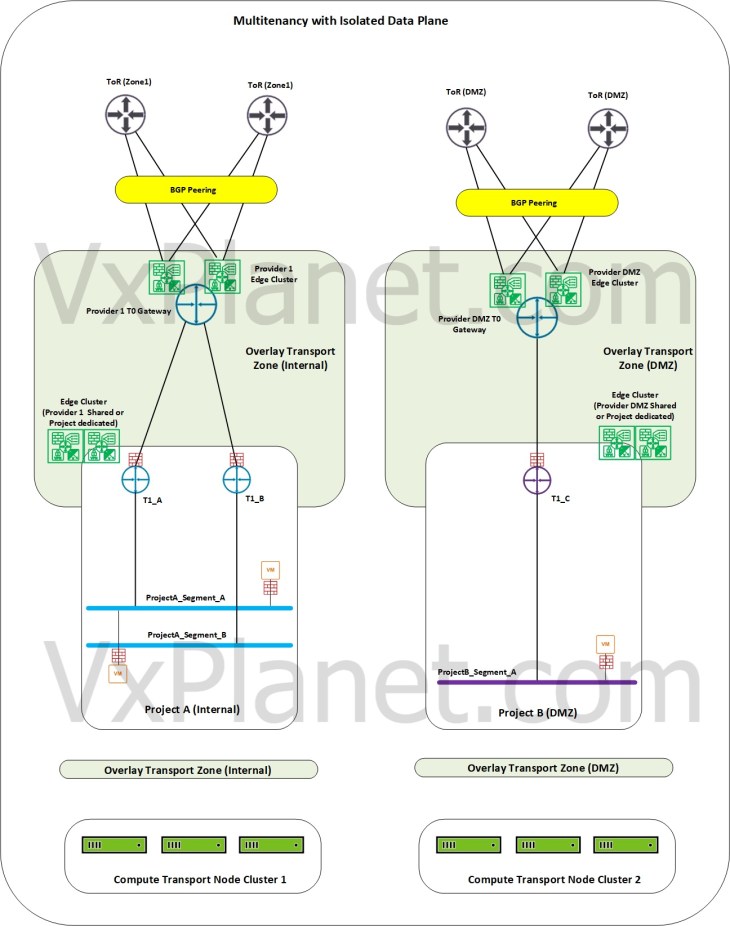

Multitenancy with Isolated data plane

Note: This model is not yet supported, as we can’t add a custom non-default overlay transport zone to a project. A project always uses the default overlay transport zone to provision segments. Hence, the description of this model is my personal assumption.

In this model, each project is assigned a dedicated Provider T0 gateway on a dedicated overlay transport zone. For example, we can have one project on the internal overlay transport zone hosted on an internal vSphere compute cluster, and another project on the DMZ overlay transport zone hosted on a DMZ vSphere compute cluster. This ensures complete data plane isolation between the projects and the underlying compute infrastructure.

The segments created on one project will be realized only on the compute clusters that are prepared on the same overlay transport zone.
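The difference between today’s behavior and this assumed model can be illustrated with a small Python sketch. It is purely conceptual: the `realized_on` function and the cluster and transport zone names are hypothetical, and the isolated layout is not something NSX projects support today.

```python
def realized_on(segment_tz, cluster_tzs):
    """Return the compute clusters on which a segment would be realized.

    A segment is realized on every cluster that is prepared on the
    segment's overlay transport zone.
    """
    return sorted(
        cluster for cluster, tzs in cluster_tzs.items() if segment_tz in tzs
    )


# Today: every cluster is prepared on the default overlay transport zone
current_layout = {
    "compute-internal": {"overlay-default"},
    "compute-dmz": {"overlay-default"},
}

# Assumed isolated model: each cluster is prepared on its own overlay TZ
isolated_layout = {
    "compute-internal": {"overlay-internal"},
    "compute-dmz": {"overlay-dmz"},
}

print(realized_on("overlay-default", current_layout))
print(realized_on("overlay-dmz", isolated_layout))
```

With the default transport zone, a project segment lands on both clusters. In the isolated layout, a segment on the DMZ transport zone would land only on the DMZ cluster.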

Let’s break for now. We will continue in Part 2, where we will configure NSX projects, assign RBAC policies to projects, apply quotas, create networking and security objects, apply route filtering and more.

Stay tuned!!!

I hope the article was informative. Thanks for reading.

Continue reading? Here are the other parts of this series:

Part 2 – NSX Projects

https://vxplanet.com/2023/10/24/nsx-multitenancy-part-2-nsx-projects/

Part 3 – Virtual Private Clouds (VPCs)

https://vxplanet.com/2023/11/05/nsx-multitenancy-part-3-virtual-private-clouds-vpcs/

Part 4 – Stateful Active-Active Gateways in Projects

https://vxplanet.com/2023/11/07/nsx-multitenancy-part-4-stateful-active-active-gateways-in-projects/

Part 5 – Edge Cluster Considerations and Failure Domains

https://vxplanet.com/2024/01/23/nsx-multitenancy-part-5-edge-cluster-considerations-and-failure-domains/

Part 6 – Integration with NSX Advanced Load Balancer

https://vxplanet.com/2024/01/29/nsx-multitenancy-part-6-integration-with-nsx-advanced-load-balancer/